Heteroscedastic models

Jan Vávra

Exercise 10

Download this R markdown as: R, Rmd.

Outline of this lab session:

- Statistical inference in heteroscedastic model

- General linear model (known variance structure)

- Heteroscedastic model with unknown structure

The idea is to consider a general version of the linear regression model where \[ \boldsymbol{Y} \sim \mathsf{N}(\mathbb{X}\boldsymbol{\beta}, \sigma^2 \mathbb{W}^{-1}), \] where \(\boldsymbol{Y} = (Y_1, \dots, Y_n)^\top\) is the response vector for \(n \in \mathbb{N}\) independent subjects, \(\mathbb{X}\) is the corresponding model matrix (of a full rank \(p \in \mathbb{N}\)) and \(\boldsymbol{\beta} \in \mathbb{R}^p\) is the vector of the unknown parameters.

Note, that the normality assumption above is only used as a simplification but it is not needed to fit the model (without normality the results and the inference will hold asymptotically).

Considering the variance structure, reflected in the matrix \(\mathbb{W} \in \mathbb{R}^{n \times n}\) (a diagonal matrix because the subjects are mutually independent) can can distinguish two cases:

- the variance matrix \(\mathbb{W}\) is a known matrix,

- the variance matrix \(\mathbb{W}\) is an unknown matrix.

These cases lead to the so-called

- general linear model (not a generalized linear model, which is a different class of models)

- and a regression model under heteroscedastic errors.

Both cases will be briefly addressed below.

1. General linear model

library("mffSM") # Hlavy, plotLM()

library("colorspace") # color palettes

library("MASS") # sdres()

library("sandwich") # vcovHC()

library("lmtest") # coeftest()

data(Hlavy, package = "mffSM")We start with the dataset Hlavy (heads) which represents

head sizes of fetuses (from an ultrasound monitoring) in different

periods of pregnancy. Each data value (row in the dataframe) provides an

average over \(n_i \in \mathbb{N}\)

measurements of \(n_i\) independent

subjects (mothers).

The information provided in the data stand for the:

iserial number,tweek of the pregnancy,nnumber of measurements used to calculate the average head size,ythe average head size obtained from the particular number of measurements.

head(Hlavy)## i t n y

## 1 1 16 2 5.100

## 2 2 18 3 5.233

## 3 3 19 9 4.744

## 4 4 20 10 5.110

## 5 5 21 11 5.236

## 6 6 22 20 5.740dim(Hlavy)## [1] 26 4summary(Hlavy)## i t n y

## Min. : 1.00 Min. :16.0 Min. : 2.0 Min. :4.74

## 1st Qu.: 7.25 1st Qu.:23.2 1st Qu.:20.2 1st Qu.:5.87

## Median :13.50 Median :29.5 Median :47.0 Median :7.78

## Mean :13.50 Mean :29.5 Mean :39.9 Mean :7.46

## 3rd Qu.:19.75 3rd Qu.:35.8 3rd Qu.:60.0 3rd Qu.:8.98

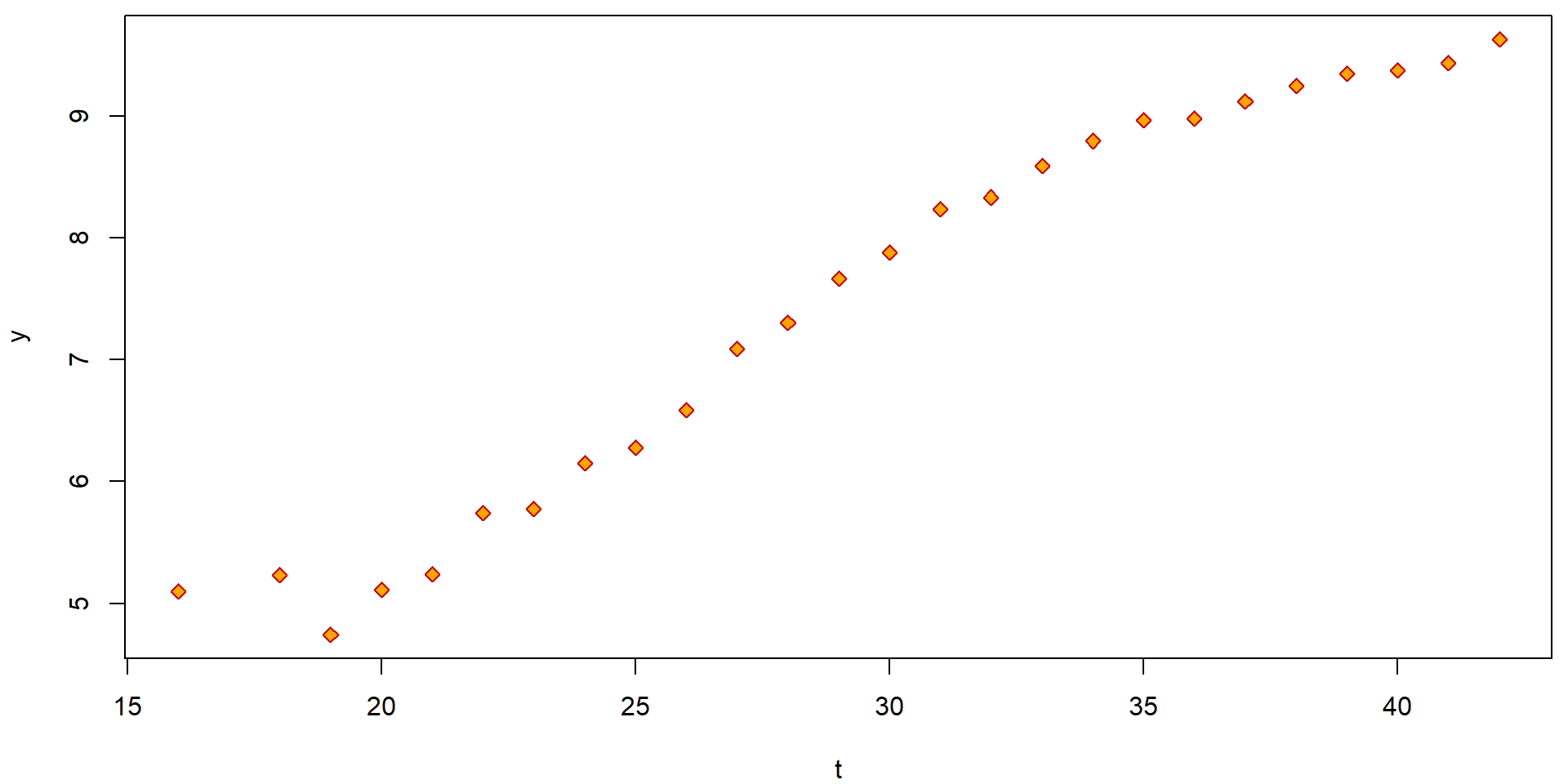

## Max. :26.00 Max. :42.0 Max. :85.0 Max. :9.63par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plot(y ~ t, data = Hlavy, pch = 23, col = "red3", bg = "orange")

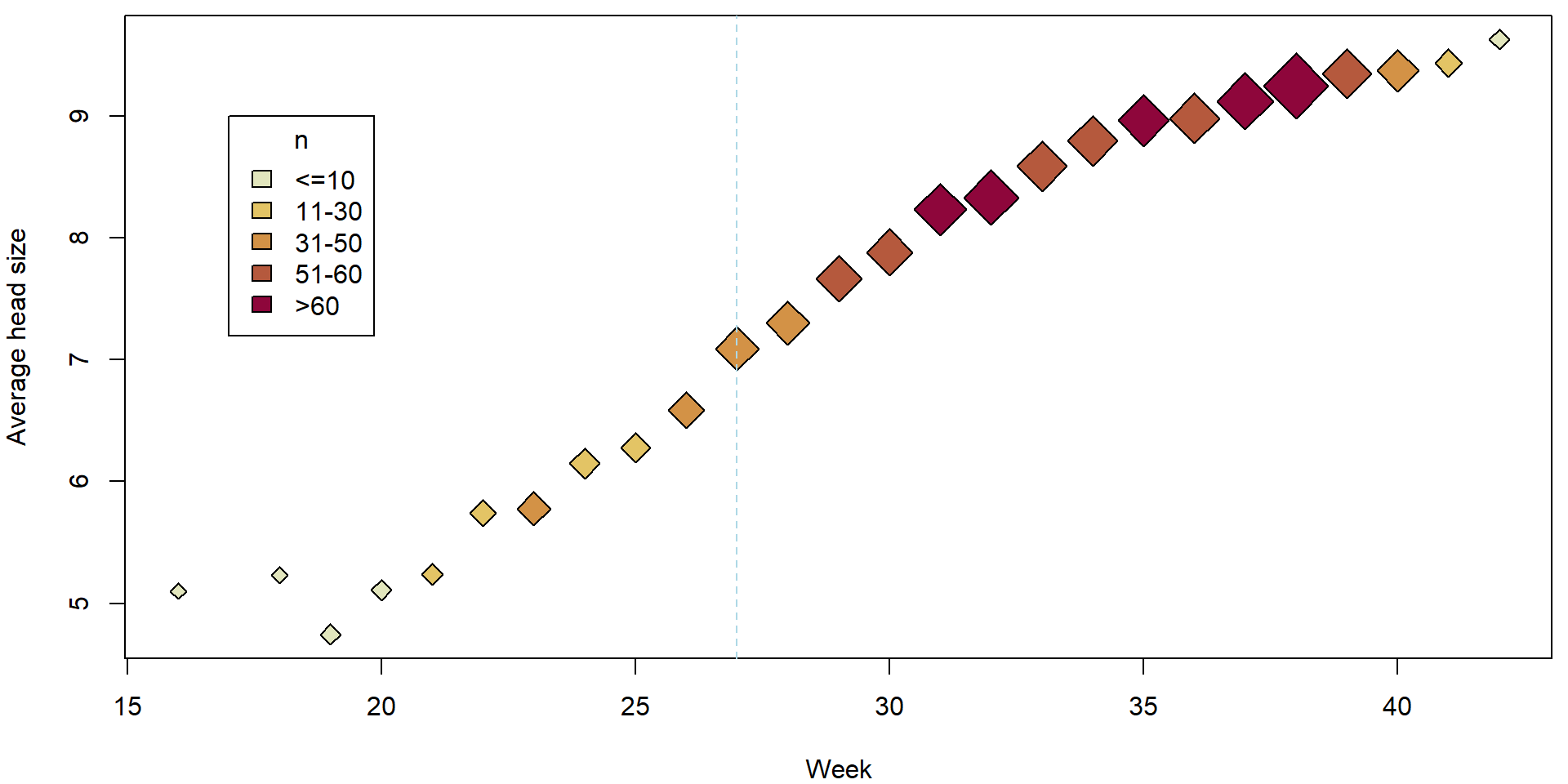

The simple scatterplot above can be, however, substantially improved by accounting also for reliability of each observation (higher number of measurements used to obtain the average also means higher reliability). This can be obtain, for instance, by the following code:

with(Hlavy, quantile(n, prob = seq(0, 1, by = 0.2)))## 0% 20% 40% 60% 80% 100%

## 2 11 31 53 60 85Hlavy <- transform(Hlavy,

fn = cut(n, breaks = c(0, 10, 30, 50, 60, 90),

labels = c("<=10", "11-30", "31-50", "51-60", ">60")),

cexn = n/25+1)

with(Hlavy, summary(fn))## <=10 11-30 31-50 51-60 >60

## 5 5 5 6 5plotHlavy <- function(){

PAL <- rev(heat_hcl(nlevels(Hlavy[, "fn"]), c.=c(80, 30), l=c(30, 90), power=c(1/5, 1.3)))

names(PAL) <- levels(Hlavy[, "fn"])

plot(y ~ t, data = Hlavy,

xlab = "Week", ylab = "Average head size",

pch = 23, col = "black", bg = PAL[fn], cex = cexn)

legend(17, 9, legend = levels(Hlavy[, "fn"]), title = "n", fill = PAL)

abline(v = 27, col = "lightblue", lty = 2)

}

par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plotHlavy()

It is reasonable to assume, that for each independent measurement \(\widetilde{Y}_{ij}\) we have some model of the form \[ \widetilde{Y}_{ij} | \boldsymbol{X}_{i} \sim \mathsf{N}(\boldsymbol{X}_{i}^\top\boldsymbol{\beta}, \sigma^2) \] where for each \(j \in \{1, \ldots, n_i\}\) these observations are measured under the same set of covariates \(\mathbf{X_i}\). This is a homoscedastic model.

Unfortunately, we do not observe \(\widetilde{Y}_{ij}\) directly, but, instead, we only observe the overall average \(Y_i = \frac{1}{n_i}\sum_{j = 1}^{n_i} \widetilde{Y}_{ij}\).

It also holds, that \[ Y_i \sim \mathsf{N}(\boldsymbol{X}_i^\top\boldsymbol{\beta}, \sigma^2/{n_i}), \] or, considering all data together, we have \[ \boldsymbol{Y} \sim \mathsf{N}_n(\mathbb{X}\boldsymbol{\beta}, \sigma^2 \mathbb{W}^{-1}), \] where \(\mathbb{W}^{-1}\) is a diagonal matrix \(\mathbb{W}^{-1} = \mathsf{diag}(1/n_1, \dots, 1/n_n)\) (thus, the variance structure is known from the design of the experiment). The matrix \(\mathbb{W} = \mathsf{diag}(n_1, \dots, n_n)\) is the weight matrix which gives each \(Y_i\) the weight corresponding to number of observations it has been obtained from.

In addition, practical motivation behind the model is the following:

Practitioners say that the relationship y ~ t could be

described by a piecewise quadratic function with t = 27

being a point where the quadratic relationship changes its shape.

From the practical point of view, the fitted curve should be

continuous at t = 27 and perhaps also

smooth (i.e., with continuous at least the first

derivative) as it is biologically not defensible to claim that at

t = 27 the growth has a jump in the speed (rate) of the

growth.

As far as different observations have different credibility, we will use the general linear model with the known matrix \(\mathbb{W}\). The model can be fitted as follows:

Hlavy <- transform(Hlavy, t27 = t - 27)

summary(mCont <- lm(y ~ t27 + I(t27^2) + (t27 > 0):t27 + (t27 > 0):I(t27^2),

weights = n, data = Hlavy))##

## Call:

## lm(formula = y ~ t27 + I(t27^2) + (t27 > 0):t27 + (t27 > 0):I(t27^2),

## data = Hlavy, weights = n)

##

## Weighted Residuals:

## Min 1Q Median 3Q Max

## -0.8821 -0.3522 0.0123 0.3792 0.8532

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 7.04975 0.04242 166.17 < 2e-16 ***

## t27 0.38118 0.03029 12.59 3.0e-11 ***

## I(t27^2) 0.01621 0.00394 4.11 0.0005 ***

## t27:t27 > 0TRUE -0.06353 0.03943 -1.61 0.1220

## I(t27^2):t27 > 0TRUE -0.02679 0.00378 -7.09 5.4e-07 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.477 on 21 degrees of freedom

## Multiple R-squared: 0.997, Adjusted R-squared: 0.996

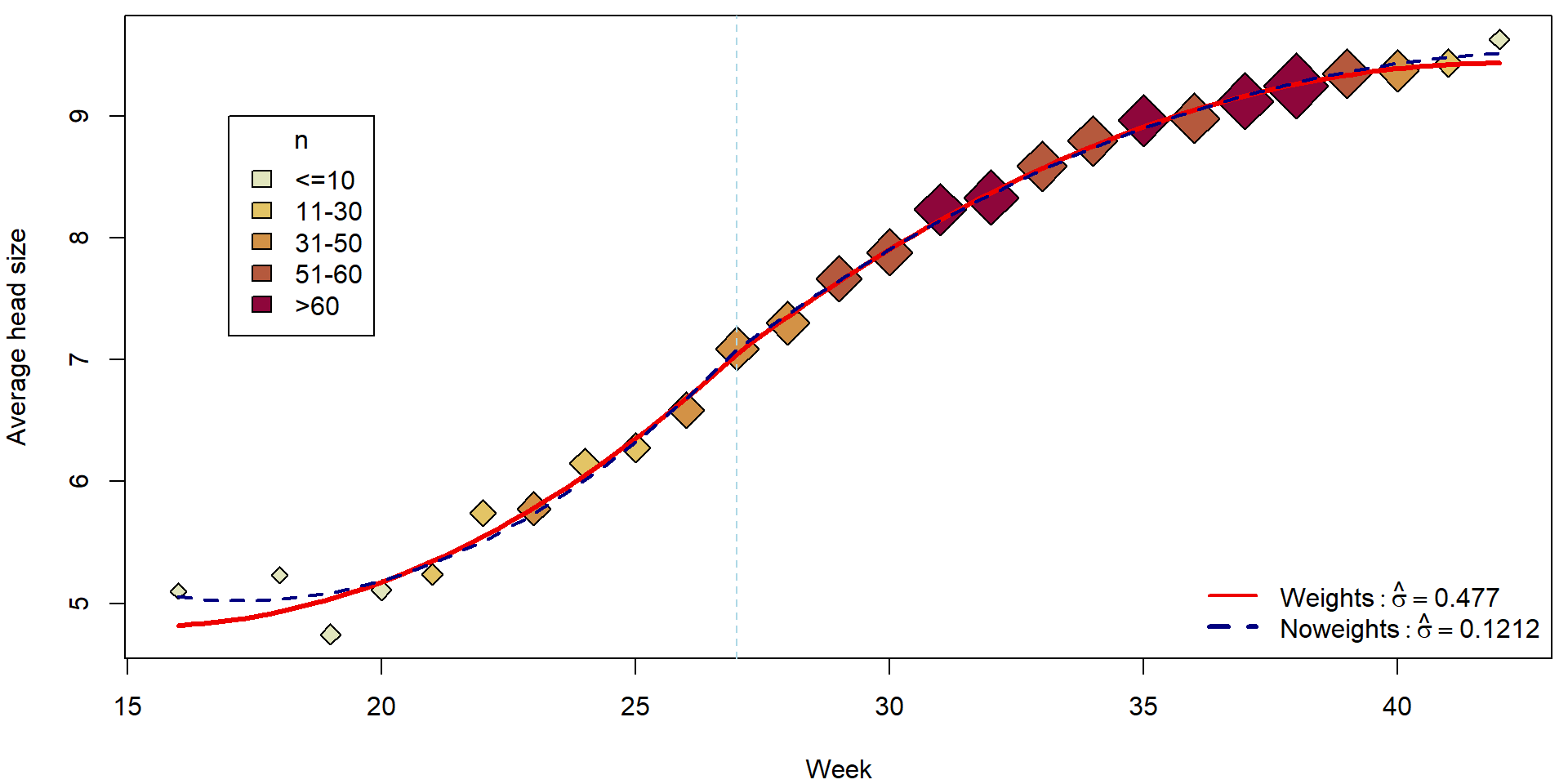

## F-statistic: 1.65e+03 on 4 and 21 DF, p-value: <2e-16Compare to the naive (and incorrect) model where the weights are ignored:

mCont_noweights <- lm(y ~ t27 + I(t27^2) + (t27 > 0):t27 + (t27 > 0):I(t27^2), data = Hlavy)

summary(mCont_noweights)##

## Call:

## lm(formula = y ~ t27 + I(t27^2) + (t27 > 0):t27 + (t27 > 0):I(t27^2),

## data = Hlavy)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.3439 -0.0575 -0.0173 0.0535 0.2285

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 7.08435 0.06901 102.65 < 2e-16 ***

## t27 0.42294 0.03263 12.96 1.7e-11 ***

## I(t27^2) 0.02167 0.00311 6.97 7.0e-07 ***

## t27:t27 > 0TRUE -0.12116 0.04926 -2.46 0.023 *

## I(t27^2):t27 > 0TRUE -0.03098 0.00292 -10.59 7.0e-10 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.121 on 21 degrees of freedom

## Multiple R-squared: 0.996, Adjusted R-squared: 0.995

## F-statistic: 1.17e+03 on 4 and 21 DF, p-value: <2e-16Fitted curves:

tgrid <- seq(16, 42, length = 100)

pdata <- data.frame(t27 = tgrid - 27)

fitCont <- predict(mCont, newdata = pdata)

par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plotHlavy()

lines(tgrid, fitCont, col = "red2", lwd = 3)

lines(tgrid, predict(mCont_noweights, newdata = pdata), col = "navyblue", lwd = 2, lty = 2)

legend("bottomright", bty = "n",

legend = c(bquote(Weights: hat(sigma) == .(summary(mCont)$sigma)),

bquote(Noweights: hat(sigma) == .(summary(mCont_noweights)$sigma))),

col = c("red2", "navyblue"), lty = c(1,2), lwd = c(2,3))

Individual work

- What is estimated by the model above? Interpret the estimated parameters.

- Can you manually reconstruct the estimated values for the mean structure and, also, the estimated values for the variance parameters?

### Key matrices

W <- diag(Hlavy$n)

Y <- Hlavy$y

X <- model.matrix(mCont)

### Estimated regression coefficients

c(betahat <- solve(t(X)%*%W%*%X)%*%(t(X)%*%W%*%Y))## [1] 7.04975 0.38118 0.01621 -0.06353 -0.02679coef(mCont)## (Intercept) t27 I(t27^2) t27:t27 > 0TRUE I(t27^2):t27 > 0TRUE

## 7.04975 0.38118 0.01621 -0.06353 -0.02679### Generalized fitted values

c(Yg <- X%*%betahat)## [1] 4.819 4.933 5.038 5.176 5.346 5.549 5.784 6.052 6.352 6.685 7.050 7.357 7.643 7.908 8.151 8.374 8.575

## [18] 8.755 8.914 9.052 9.169 9.265 9.339 9.393 9.425 9.436fitted(mCont)## 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18

## 4.819 4.933 5.038 5.176 5.346 5.549 5.784 6.052 6.352 6.685 7.050 7.357 7.643 7.908 8.151 8.374 8.575 8.755

## 19 20 21 22 23 24 25 26

## 8.914 9.052 9.169 9.265 9.339 9.393 9.425 9.436### Generalized residual sum of squares

(RSS <- t(Y - Yg)%*%W%*%(Y - Yg))## [,1]

## [1,] 4.778### Generalized mean square

RSS/(length(Y)-ncol(X))## [,1]

## [1,] 0.2275(summary(mCont)$sigma)^2## [1] 0.2275### Be careful that Multiple R-squared: 0.9968 reported in the output "summary(mCont)" is not equal to

sum((Yg-mean(Yg))^2)/sum((Y-mean(Y))^2)## [1] 1.006### Instead, it equals the following quantity that is more difficult to interpret

barYw <- sum(Y*Hlavy$n)/sum(Hlavy$n)

### weighted mean of the original observations

### something as "generalized" regression sum of squares

SSR <- sum((Yg - barYw)^2 * Hlavy$n)

### something as "generalized" total variance

SST <- sum((Y - barYw)^2 * Hlavy$n)

### "generalized" R squared

SSR/SST## [1] 0.9968summary(mCont)$r.squared## [1] 0.9968- Compare the general linear model above with the simple model below. What are the main differences between these two models? Can you explain them?

iii <- rep(1:nrow(Hlavy), Hlavy$n)

Hlavy2 <- Hlavy[iii,] # new dataset

summary(mCont2 <- lm(y ~ t27 + I(t27^2) + (t27 > 0):t27 + (t27 > 0):I(t27^2), data = Hlavy2))##

## Call:

## lm(formula = y ~ t27 + I(t27^2) + (t27 > 0):t27 + (t27 > 0):I(t27^2),

## data = Hlavy2)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.2940 -0.0450 -0.0147 0.0413 0.3005

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 7.049749 0.006055 1164.3 <2e-16 ***

## t27 0.381177 0.004323 88.2 <2e-16 ***

## I(t27^2) 0.016214 0.000563 28.8 <2e-16 ***

## t27:t27 > 0TRUE -0.063531 0.005627 -11.3 <2e-16 ***

## I(t27^2):t27 > 0TRUE -0.026786 0.000539 -49.7 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.0681 on 1031 degrees of freedom

## Multiple R-squared: 0.997, Adjusted R-squared: 0.997

## F-statistic: 8.11e+04 on 4 and 1031 DF, p-value: <2e-16summary(mCont)##

## Call:

## lm(formula = y ~ t27 + I(t27^2) + (t27 > 0):t27 + (t27 > 0):I(t27^2),

## data = Hlavy, weights = n)

##

## Weighted Residuals:

## Min 1Q Median 3Q Max

## -0.8821 -0.3522 0.0123 0.3792 0.8532

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 7.04975 0.04242 166.17 < 2e-16 ***

## t27 0.38118 0.03029 12.59 3.0e-11 ***

## I(t27^2) 0.01621 0.00394 4.11 0.0005 ***

## t27:t27 > 0TRUE -0.06353 0.03943 -1.61 0.1220

## I(t27^2):t27 > 0TRUE -0.02679 0.00378 -7.09 5.4e-07 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.477 on 21 degrees of freedom

## Multiple R-squared: 0.997, Adjusted R-squared: 0.996

## F-statistic: 1.65e+03 on 4 and 21 DF, p-value: <2e-162. Model with heteroscedastic errors (sandwich estimate)

For a general heteroscedastic model it is usually

assumed that

\[

Y_i | \boldsymbol{X}_i \sim

\mathsf{N}(\boldsymbol{X}_i^\top\boldsymbol{\beta},

\sigma^2(\boldsymbol{X}_i))

\] where \(\sigma^2(\cdot)\) is

some positive (unknown) function of the response variables \(\boldsymbol{X}_i \in \mathbb{R}^p\). Note,

that the normality assumption is here only given for simplification but

it is not required.

In the following, we will consider the dataset that comes from the

project MANA (Maturita na necisto) launched in the Czech Republic in

2005. This project was a part of a bigger project whose goal was to

prepare a new form of graduation exam (“statni maturita”).

This dataset contains the results in English language at grammar

schools.

load(url("http://msekce.karlin.mff.cuni.cz/~vavraj/nmfm334/data/mana.RData"))The variables in the data are:

region(1 = Praha; 2 = Stredocesky; 3 = Jihocesky; 4 = Plzensky; 5 = Karlovarsky; 6 = Ustecky; 7 = Liberecky; 8 = Kralovehradecky; 9 = Pardubicky; 10 = Vysocina; 11 = Jihomoravsky; 12 = Olomoucky; 13 = Zlinsky; 14 = Moravskoslezsky),specialization(1 = education; 2 = social science; 3 = languages; 4 = law; 5 = math-physics; 6 = engineering; 7 = science; 8 = medicine; 9 = economics; 10 = agriculture; 11 = art),gender(0 = female; 1 = male),graduation(0 = no; 1 = yes),entry.exam(0 = no; 1 = yes),score(score in English language in MANA test),avg.mark(average mark (grade) from all subjects at the last report card), andmark.eng(average mark (grade) from the English language at the last 5 report cards).

For better intuition, we can transform the data as follows:

mana <- transform(mana,

fregion = factor(region, levels = 1:14,

labels = c("A", "S", "C", "P", "K", "U", "L",

"H", "E", "J", "B", "M", "Z", "T")),

fspec = factor(specialization, levels = 1:11,

labels = c("Educ", "Social", "Lang", "Law",

"Mat-Phys", "Engin", "Science",

"Medic", "Econom", "Agric", "Art")),

fgender = factor(gender, levels = 0:1, labels = c("Female", "Male")),

fgrad = factor(graduation, levels = 0:1, labels = c("No", "Yes")),

fentry = factor(entry.exam, levels = 0:1, labels = c("No", "Yes")))

summary(mana)## region specialization gender graduation entry.exam score avg.mark

## Min. : 1.00 Min. : 1.0 Min. :0.000 Min. :0.000 Min. :0.000 Min. : 5 Min. :1.00

## 1st Qu.: 3.00 1st Qu.: 2.0 1st Qu.:0.000 1st Qu.:1.000 1st Qu.:0.000 1st Qu.:40 1st Qu.:1.48

## Median : 7.00 Median : 6.0 Median :0.000 Median :1.000 Median :0.000 Median :44 Median :1.64

## Mean : 7.46 Mean : 5.5 Mean :0.415 Mean :0.959 Mean :0.454 Mean :43 Mean :1.65

## 3rd Qu.:11.00 3rd Qu.: 8.0 3rd Qu.:1.000 3rd Qu.:1.000 3rd Qu.:1.000 3rd Qu.:48 3rd Qu.:1.81

## Max. :14.00 Max. :11.0 Max. :1.000 Max. :1.000 Max. :1.000 Max. :52 Max. :2.47

##

## mark.eng fregion fspec fgender fgrad fentry

## Min. :1.00 T : 923 Social : 855 Female:3044 No : 216 No :2843

## 1st Qu.:1.40 S : 665 Econom : 846 Male :2159 Yes:4987 Yes:2360

## Median :2.00 B : 634 Engin : 638

## Mean :2.18 A : 599 Medic : 564

## 3rd Qu.:2.80 L : 534 Science: 557

## Max. :4.20 C : 474 Educ : 513

## (Other):1374 (Other):1230Individual work

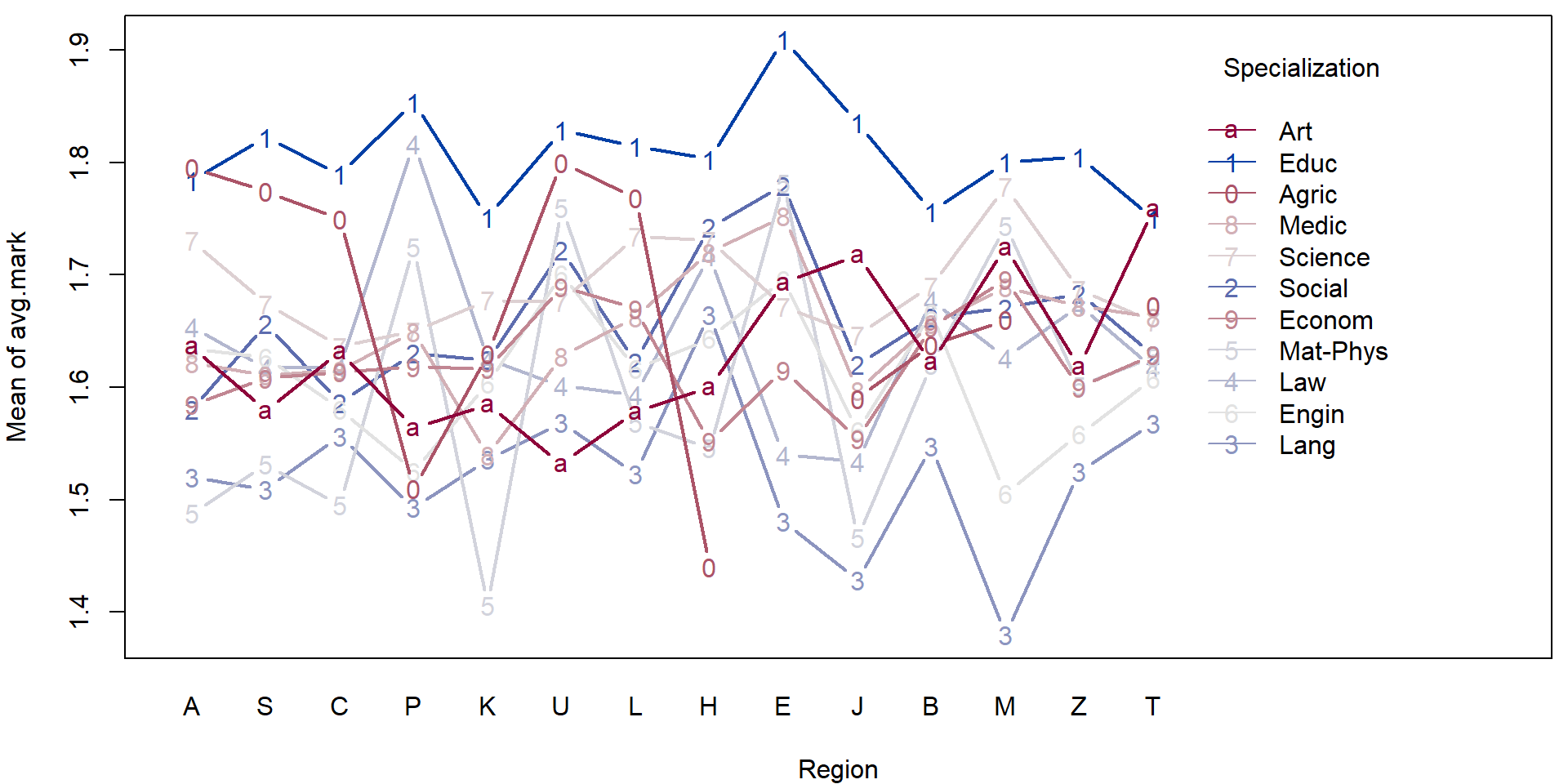

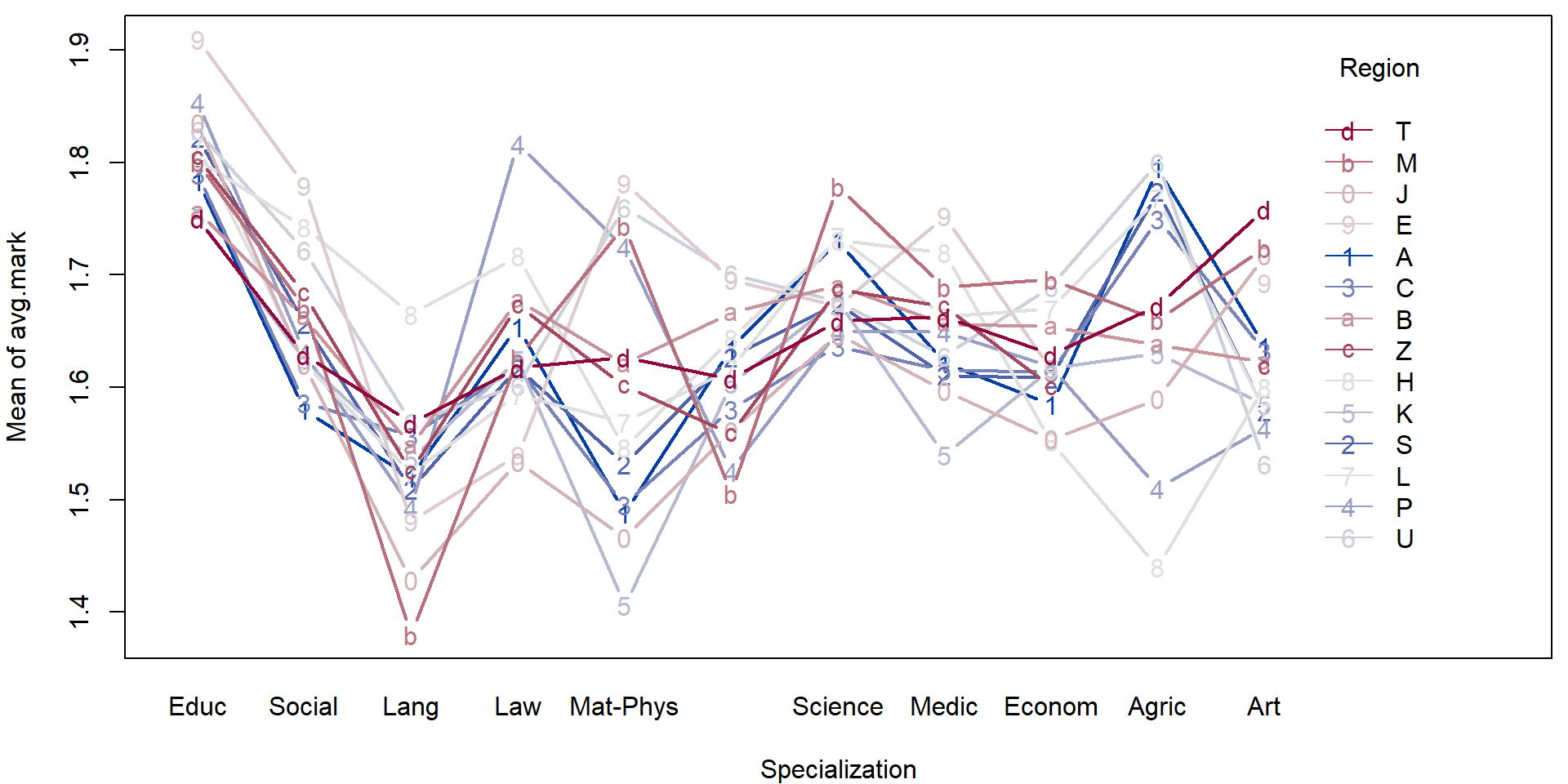

- Consider the transformed data above and perform a simple exploratory analysis (in terms of some simple sample characteristics and plots).

- Focus, in particular on the region in the Czech republic and the specialization.

For illustration purposes we will fit a simple (additive) model to explain the average mark given the region and the specialization.

mAddit <- lm(avg.mark ~ fregion + fspec, data = mana)

summary(mAddit)##

## Call:

## lm(formula = avg.mark ~ fregion + fspec, data = mana)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.6884 -0.1644 -0.0052 0.1562 0.8402

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1.77616 0.01432 124.06 < 2e-16 ***

## fregionS 0.00887 0.01334 0.66 0.5063

## fregionC -0.01336 0.01455 -0.92 0.3585

## fregionP 0.02008 0.02197 0.91 0.3607

## fregionK -0.02198 0.02098 -1.05 0.2948

## fregionU 0.05678 0.02049 2.77 0.0056 **

## fregionL 0.02388 0.01411 1.69 0.0907 .

## fregionH 0.04861 0.02104 2.31 0.0209 *

## fregionE 0.07980 0.02049 3.89 1.0e-04 ***

## fregionJ -0.03138 0.01942 -1.62 0.1062

## fregionB 0.03236 0.01348 2.40 0.0164 *

## fregionM 0.02588 0.02159 1.20 0.2306

## fregionZ 0.01653 0.01880 0.88 0.3794

## fregionT 0.00846 0.01250 0.68 0.4984

## fspecSocial -0.15080 0.01325 -11.38 < 2e-16 ***

## fspecLang -0.26387 0.01700 -15.52 < 2e-16 ***

## fspecLaw -0.15020 0.01534 -9.79 < 2e-16 ***

## fspecMat-Phys -0.22192 0.01926 -11.52 < 2e-16 ***

## fspecEngin -0.17751 0.01403 -12.65 < 2e-16 ***

## fspecScience -0.10411 0.01448 -7.19 7.4e-13 ***

## fspecMedic -0.14368 0.01446 -9.94 < 2e-16 ***

## fspecEconom -0.16751 0.01330 -12.59 < 2e-16 ***

## fspecAgric -0.07115 0.03120 -2.28 0.0226 *

## fspecArt -0.15527 0.02030 -7.65 2.4e-14 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.236 on 5179 degrees of freedom

## Multiple R-squared: 0.0712, Adjusted R-squared: 0.0671

## F-statistic: 17.3 on 23 and 5179 DF, p-value: <2e-16par(mar = c(4,4,1.5,0.5))

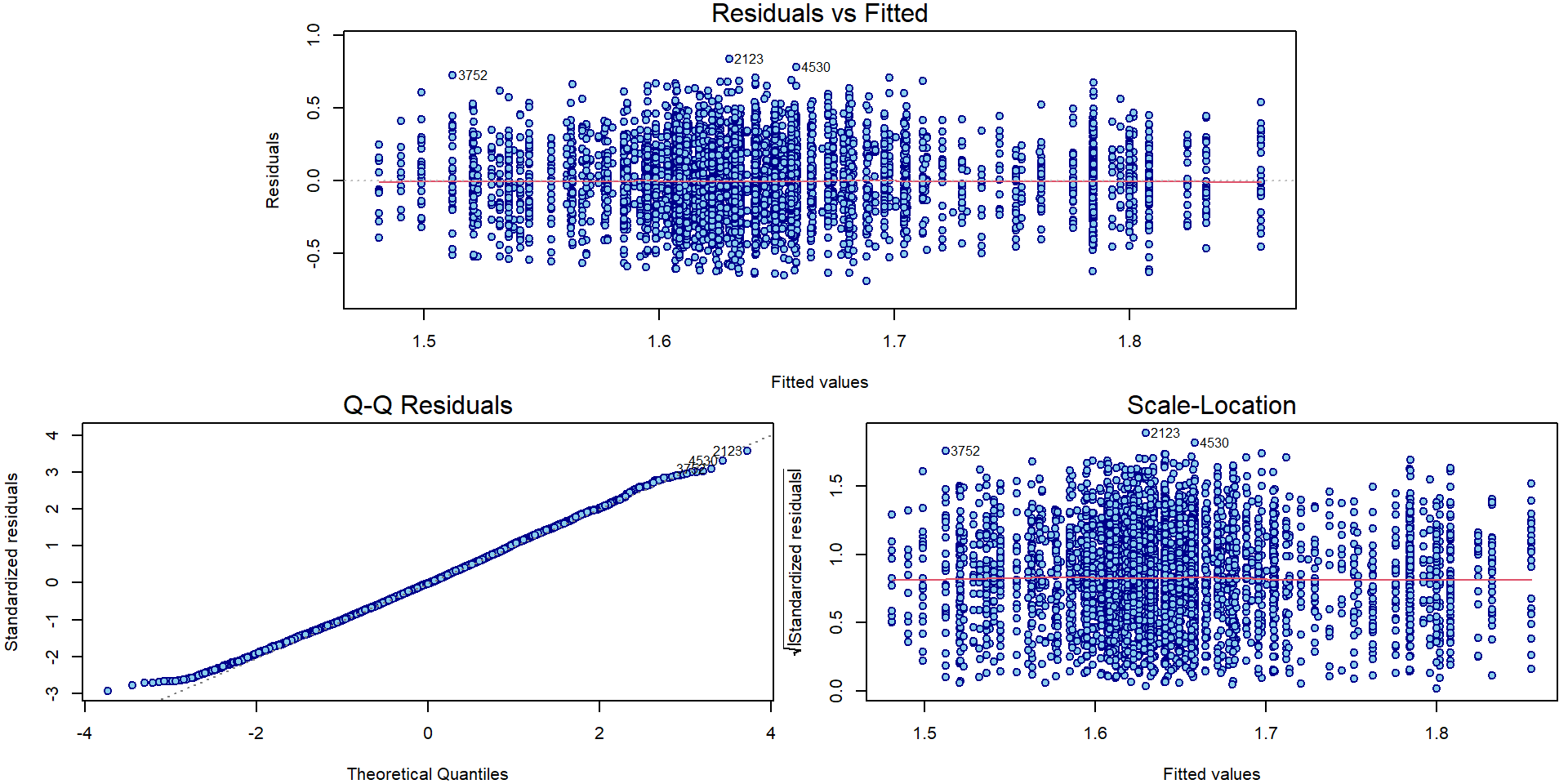

plotLM(mAddit) Could the variability vary with the region or school specialization?

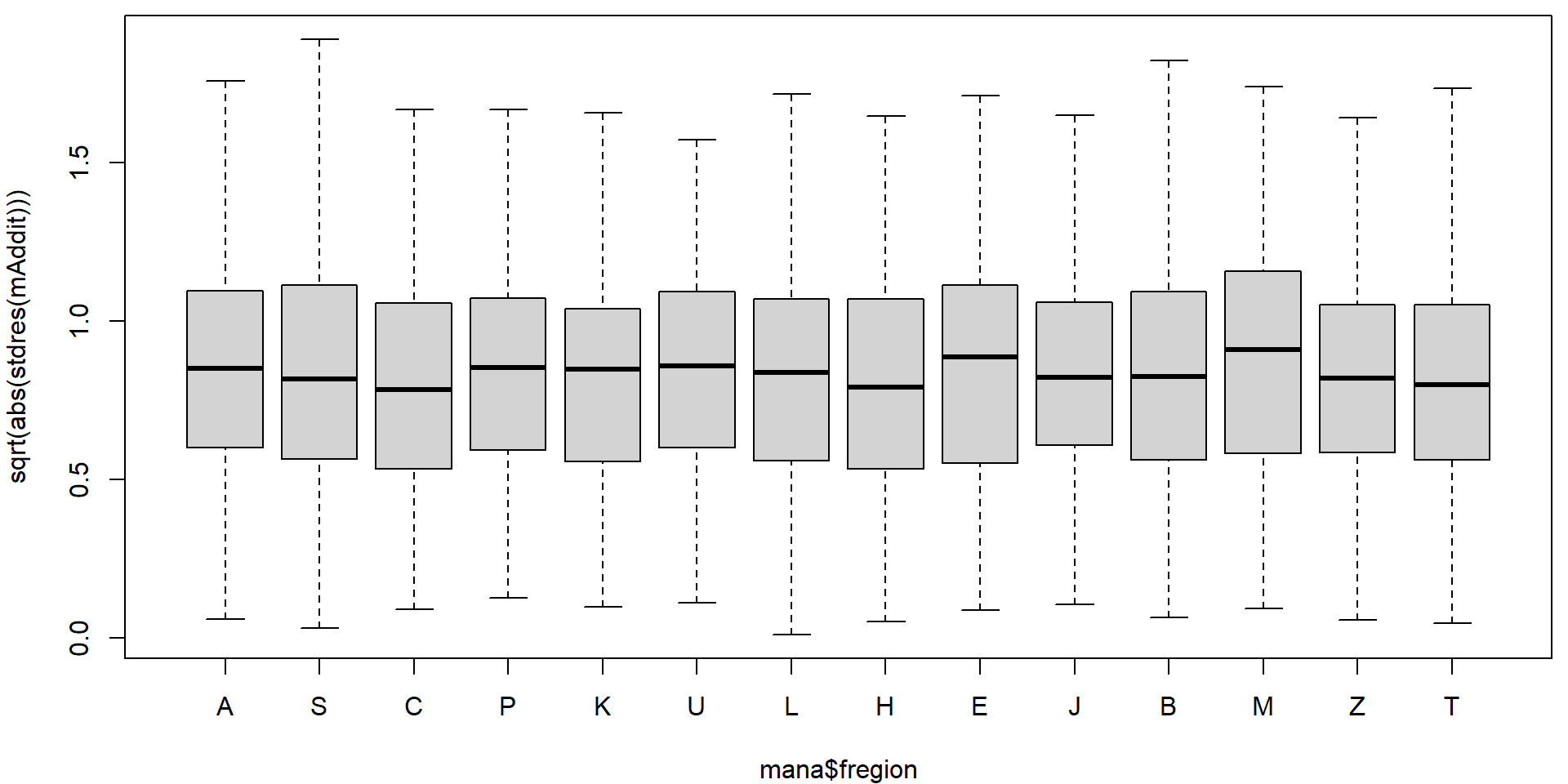

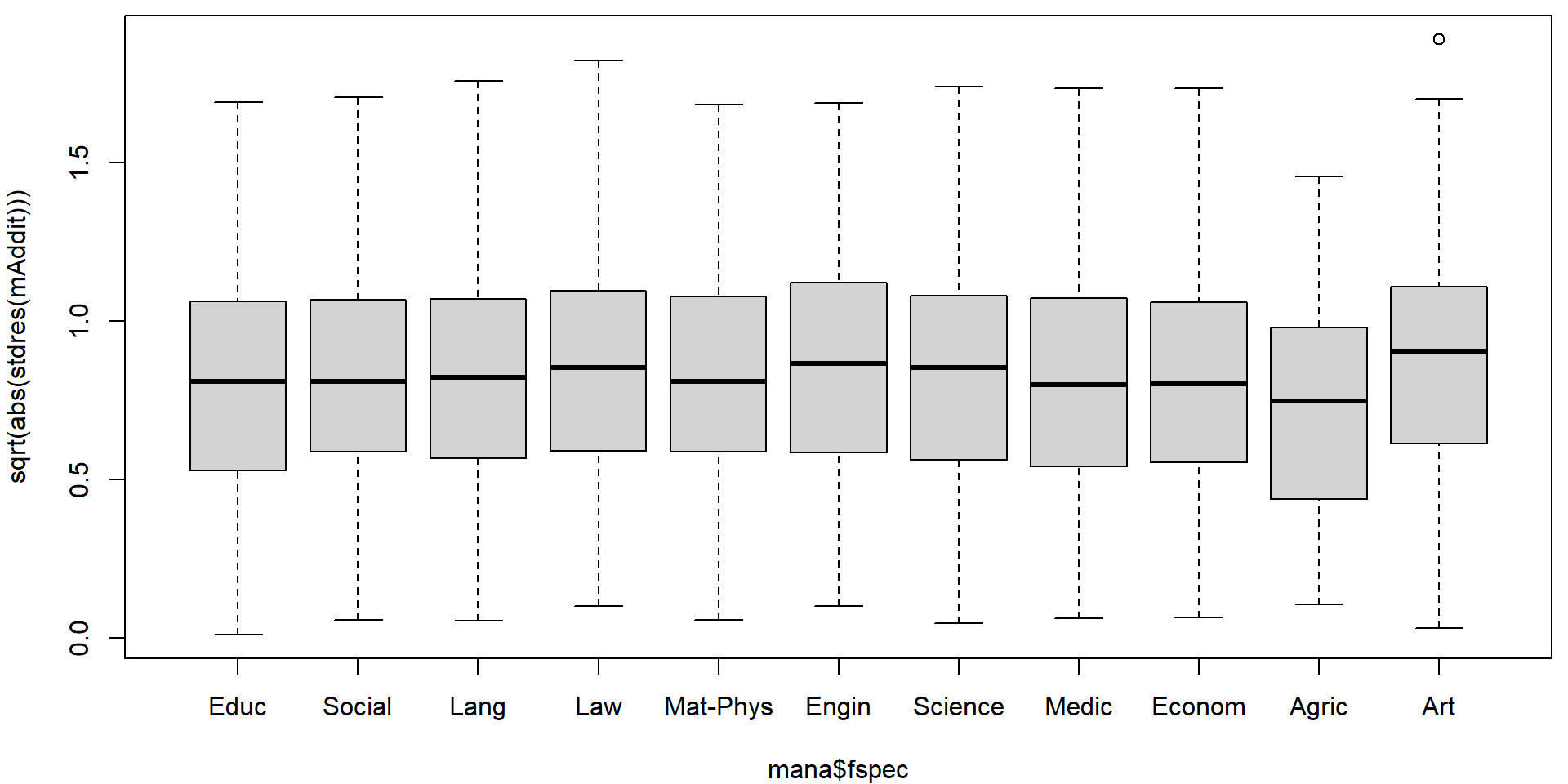

Could the variability vary with the region or school specialization?

par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plot(sqrt(abs(stdres(mAddit))) ~ mana$fregion)

plot(sqrt(abs(stdres(mAddit))) ~ mana$fspec)

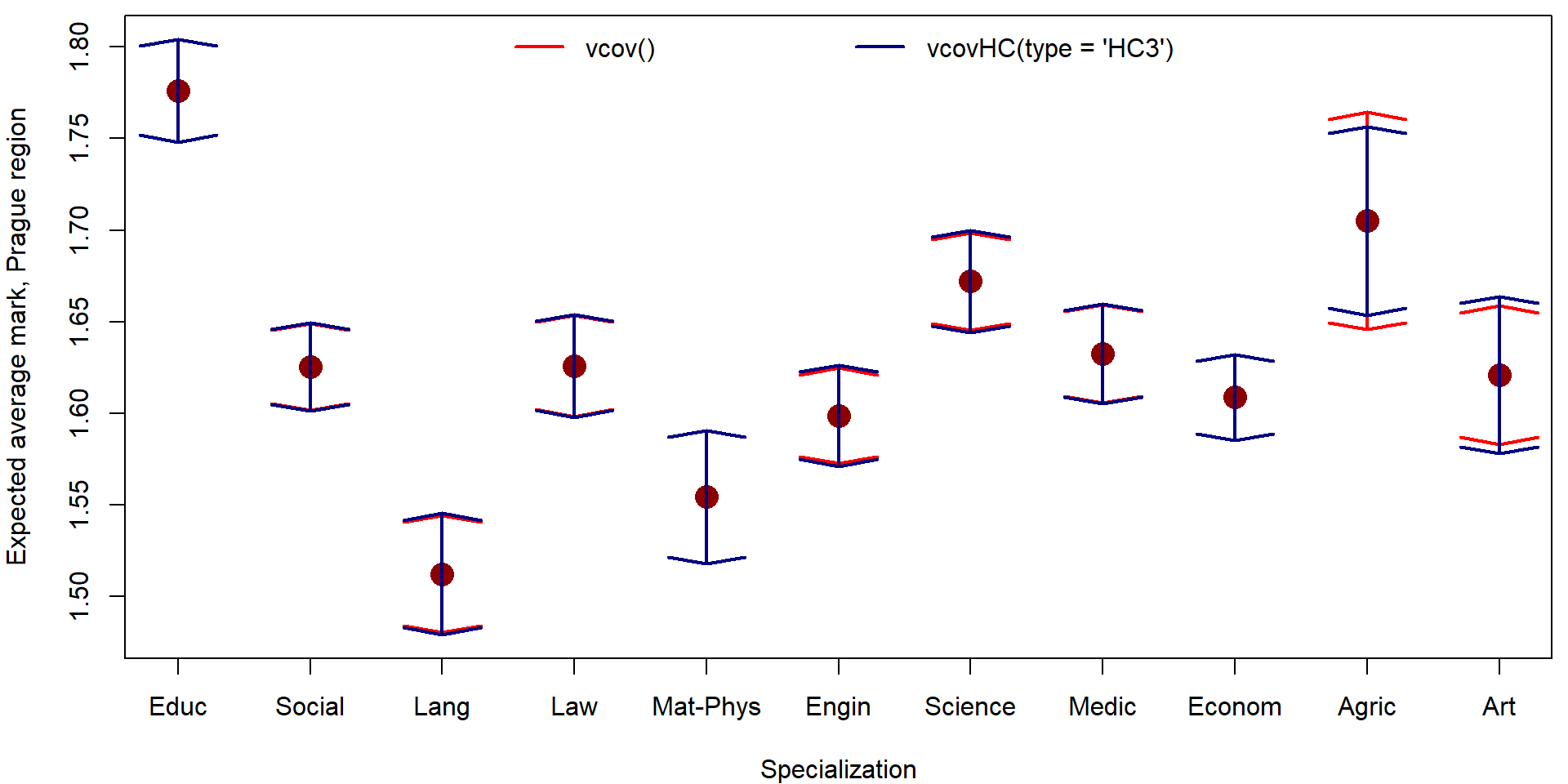

In the diagnostic plots, there are some obvious issues with heteroscedasticity. We will use the sandwich estimate of the variance matrix to account for this heteroscedasticity.

Generally, for the variance matrix of \(\widehat{\boldsymbol{\beta}}\) we have

\[

\mathsf{var} \left[ \left. \widehat{\boldsymbol{\beta}} \right|

\mathbb{X} \right]

=

\mathsf{var} \left[ \left. \left(\mathbb{X}^\top \mathbb{X}\right)^{-1}

\mathbb{X}^\top \mathbf{Y} \right| \mathbb{X} \right]

=

\left(\mathbb{X}^\top \mathbb{X}\right)^{-1} \mathbb{X}^\top

\mathsf{var} \left[ \left. \mathbf{Y} \right| \mathbb{X} \right]

\mathbb{X} \left(\mathbb{X}^\top \mathbb{X}\right)^{-1}.

\] Under homoscedasticity, \(\mathsf{var} \left[ \left. \mathbf{Y} \right|

\mathbb{X} \right] = \sigma^2 \mathbb{I}\) and the variance

estimator for \(\mathsf{var} \left[ \left.

\widehat{\boldsymbol{\beta}} \right| \mathbb{X} \right]\) is

\[

\widehat{\mathsf{var}} \left[ \left. \widehat{\boldsymbol{\beta}}

\right| \mathbb{X} \right]

=

\left(\mathbb{X}^\top \mathbb{X}\right)^{-1} \mathbb{X}^\top

\left[\widehat{\sigma}^2 \mathbb{I} \right]

\mathbb{X} \left(\mathbb{X}^\top \mathbb{X}\right)^{-1}

=

\widehat{\sigma}^2 \left(\mathbb{X}^\top \mathbb{X}\right)^{-1}.

\] Under heteroscedasticity, we consider

Heteroscedasticity-Consistent

covariance matrix estimator, which differs in the estimation of the

meat \(\mathsf{var} \left[ \left.

\mathbf{Y} \right| \mathbb{X} \right] = \mathsf{var} \left[ \left.

\boldsymbol{\varepsilon} \right| \mathbb{X} \right]\). Default

choice of type = "HC3" uses \[

\widehat{\mathsf{var}} \left[ \left. \mathbf{Y} \right| \mathbb{X}

\right]

=

\mathsf{diag} \left(U_i^2 / m_{ii}^2 \right),

\] where \(\mathbf{U} = \mathbf{Y} -

\widehat{\mathbf{Y}} = \mathbf{Y} - \mathbb{X} \left(\mathbb{X}^\top

\mathbb{X}\right)^{-1} \mathbb{X}^\top \mathbf{Y} = (\mathbb{I} -

\mathbb{H}) \mathbf{Y} = \mathbb{M} \mathbf{Y}\) are the

residuals.

library(sandwich)

### Estimate of the variance matrix of the regression coefficients

VHC <- vcovHC(mAddit, type = "HC3") # type changes the "meat"

VHC[1:5,1:5]## (Intercept) fregionS fregionC fregionP fregionK

## (Intercept) 0.0002036 -0.0001045 -0.0001066 -0.0001052 -0.0001035

## fregionS -0.0001045 0.0001943 0.0001021 0.0001023 0.0001012

## fregionC -0.0001066 0.0001021 0.0002078 0.0001022 0.0001006

## fregionP -0.0001052 0.0001023 0.0001022 0.0004862 0.0001011

## fregionK -0.0001035 0.0001012 0.0001006 0.0001011 0.0004262And we can compare the result with manually reconstructed estimate

X <- model.matrix(mAddit)

Bread <- solve(t(X) %*% X) %*% t(X)

Meat <- diag(residuals(mAddit)^2 / (1 - hatvalues(mAddit))^2)

(Bread %*% Meat %*% t(Bread))[1:5, 1:5]## (Intercept) fregionS fregionC fregionP fregionK

## (Intercept) 0.0002036 -0.0001045 -0.0001066 -0.0001052 -0.0001035

## fregionS -0.0001045 0.0001943 0.0001021 0.0001023 0.0001012

## fregionC -0.0001066 0.0001021 0.0002078 0.0001022 0.0001006

## fregionP -0.0001052 0.0001023 0.0001022 0.0004862 0.0001011

## fregionK -0.0001035 0.0001012 0.0001006 0.0001011 0.0004262all.equal(Bread %*% Meat %*% t(Bread), VHC)## [1] TRUEThis can be compared with the classical estimate of the variance covariance matrix - in the following, we only focus on some portion of the matrix multiplied by 10000.

10000*VHC[1:5, 1:5] ## (Intercept) fregionS fregionC fregionP fregionK

## (Intercept) 2.036 -1.045 -1.066 -1.052 -1.035

## fregionS -1.045 1.943 1.021 1.023 1.012

## fregionC -1.066 1.021 2.078 1.022 1.006

## fregionP -1.052 1.023 1.022 4.862 1.011

## fregionK -1.035 1.012 1.006 1.011 4.26210000*vcov(mAddit)[1:5, 1:5]## (Intercept) fregionS fregionC fregionP fregionK

## (Intercept) 2.0496 -1.0091 -0.9705 -0.9934 -0.9450

## fregionS -1.0091 1.7808 0.9401 0.9440 0.9342

## fregionC -0.9705 0.9401 2.1167 0.9437 0.9367

## fregionP -0.9934 0.9440 0.9437 4.8252 0.9394

## fregionK -0.9450 0.9342 0.9367 0.9394 4.4006Note, that some variance components are overestimated by the standard

estimate (function vcov()) and some others or

underestimated.

The inference then proceeds with the same point estimate \(\widehat{\boldsymbol{\beta}}\) but a

different vcovHC matrix instead of standard

vcov(). Classical summary table can be recalculated in the

following way:

coeftest(mAddit, vcov = VHC) # from library("lmtest")##

## t test of coefficients:

##

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1.77616 0.01427 124.48 < 2e-16 ***

## fregionS 0.00887 0.01394 0.64 0.52456

## fregionC -0.01336 0.01442 -0.93 0.35401

## fregionP 0.02008 0.02205 0.91 0.36255

## fregionK -0.02198 0.02064 -1.06 0.28711

## fregionU 0.05678 0.02139 2.65 0.00797 **

## fregionL 0.02388 0.01410 1.69 0.09046 .

## fregionH 0.04861 0.02023 2.40 0.01631 *

## fregionE 0.07980 0.02170 3.68 0.00024 ***

## fregionJ -0.03138 0.01963 -1.60 0.10999

## fregionB 0.03236 0.01395 2.32 0.02042 *

## fregionM 0.02588 0.02425 1.07 0.28581

## fregionZ 0.01653 0.01835 0.90 0.36766

## fregionT 0.00846 0.01261 0.67 0.50237

## fspecSocial -0.15080 0.01287 -11.71 < 2e-16 ***

## fspecLang -0.26387 0.01688 -15.63 < 2e-16 ***

## fspecLaw -0.15020 0.01528 -9.83 < 2e-16 ***

## fspecMat-Phys -0.22192 0.01888 -11.75 < 2e-16 ***

## fspecEngin -0.17751 0.01420 -12.50 < 2e-16 ***

## fspecScience -0.10411 0.01437 -7.24 5.0e-13 ***

## fspecMedic -0.14368 0.01413 -10.17 < 2e-16 ***

## fspecEconom -0.16751 0.01282 -13.06 < 2e-16 ***

## fspecAgric -0.07115 0.02715 -2.62 0.00879 **

## fspecArt -0.15527 0.02229 -6.97 3.7e-12 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Unfortunately, function predict.lm is not as flexible

and confidence intervals need to be computed manually

newdata <- data.frame(fregion = "A",

fspec = levels(mana$fspec),

avg.mark = 0)

# predict no sandwich

pred_vcov <- predict(mAddit, newdata = newdata, interval = "confidence")

# predict with sandwich (manually)

L <- model.matrix(mAddit, data = newdata)

se.fit <- sqrt(diag(L %*% VHC %*% t(L)))

pred_VHC <- matrix(NA, nrow = nrow(L), ncol = 3)

pred_VHC[,1] <- L %*% coef(mAddit)

pred_VHC[,2] <- pred_VHC[,1] - se.fit * qt(0.975, df = mAddit$df.residual)

pred_VHC[,3] <- pred_VHC[,1] + se.fit * qt(0.975, df = mAddit$df.residual)

colnames(pred_VHC) <- c("fit", "lwr", "upr")

rownames(pred_VHC) <- rownames(pred_vcov)

all.equal(pred_vcov[,"fit"], pred_VHC[,"fit"])## [1] TRUEall.equal(pred_vcov[,"lwr"], pred_VHC[,"lwr"])## [1] "Mean relative difference: 0.001029"all.equal(pred_vcov[,"upr"], pred_VHC[,"upr"])## [1] "Mean relative difference: 0.0009899"par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plot(x = 1:nlevels(mana$fspec), y = pred_vcov[,"fit"],

xlab = "Specialization", ylab = "Expected average mark, Prague region",

xaxt = "n", ylim = range(pred_vcov, pred_VHC),

col = "red4", pch = 16, cex = 2)

axis(1, at = 1:nlevels(mana$fspec), labels = levels(mana$fspec))

arrows(x0 = 1:nlevels(mana$fspec), y0 = pred_vcov[,"fit"], y1 = pred_vcov[,"lwr"],

angle = 80, lwd = 2, col = "red")

arrows(x0 = 1:nlevels(mana$fspec), y0 = pred_vcov[,"fit"], y1 = pred_vcov[,"upr"],

angle = 80, lwd = 2, col = "red")

arrows(x0 = 1:nlevels(mana$fspec), y0 = pred_vcov[,"fit"], y1 = pred_VHC[,"lwr"],

angle = 80, lwd = 2, col = "navyblue")

arrows(x0 = 1:nlevels(mana$fspec), y0 = pred_vcov[,"fit"], y1 = pred_VHC[,"upr"],

angle = 80, lwd = 2, col = "navyblue")

legend("top", legend = c("vcov()", "vcovHC(type = 'HC3')"),

ncol = 2, bty = "n", col = c("red", "navyblue"), lwd = 2)