Interactions, transformations, hierarchy

Jan Vávra

Exercise 6

Download this R markdown as: R, Rmd.

Outline of the lab session

- Multiple regression model with interactions

- Transformations of the explanatory variables

- Hierarchically well formulated model vs. non-hierarchical model

Loading the data and libraries

For the purpose of this lab session we will consider the dataset

Dris from the R library mffSM. We will be

interested in the dependence of the yield on the amount of magnesium

concentration (yield ~ Mg) and the amount of the calcium

concentration (yield ~ Ca).

library("mffSM")

library("colorspace")

data(Dris, package = "mffSM")All together, there are 368 observations available in the dataset and 6 different measurements (although, we will not use all of them). A brief insight about the data can be taken from the following code:

dim(Dris)## [1] 368 6head(Dris)## yield N P K Ca Mg

## 1 5.47 470 47 320 47 11

## 2 5.63 530 48 357 60 16

## 3 5.63 530 48 310 63 16

## 4 4.84 482 47 357 47 13

## 5 4.84 506 48 294 52 15

## 6 4.21 500 45 283 61 14summary(Dris)## yield N P K Ca Mg

## Min. :1.92 Min. :268 Min. :32.0 Min. :200 Min. :29.0 Min. : 8.0

## 1st Qu.:4.04 1st Qu.:427 1st Qu.:43.0 1st Qu.:338 1st Qu.:41.8 1st Qu.:10.0

## Median :4.84 Median :473 Median :49.0 Median :377 Median :49.0 Median :11.0

## Mean :4.86 Mean :470 Mean :48.6 Mean :375 Mean :51.5 Mean :11.6

## 3rd Qu.:5.56 3rd Qu.:518 3rd Qu.:54.0 3rd Qu.:407 3rd Qu.:59.0 3rd Qu.:13.0

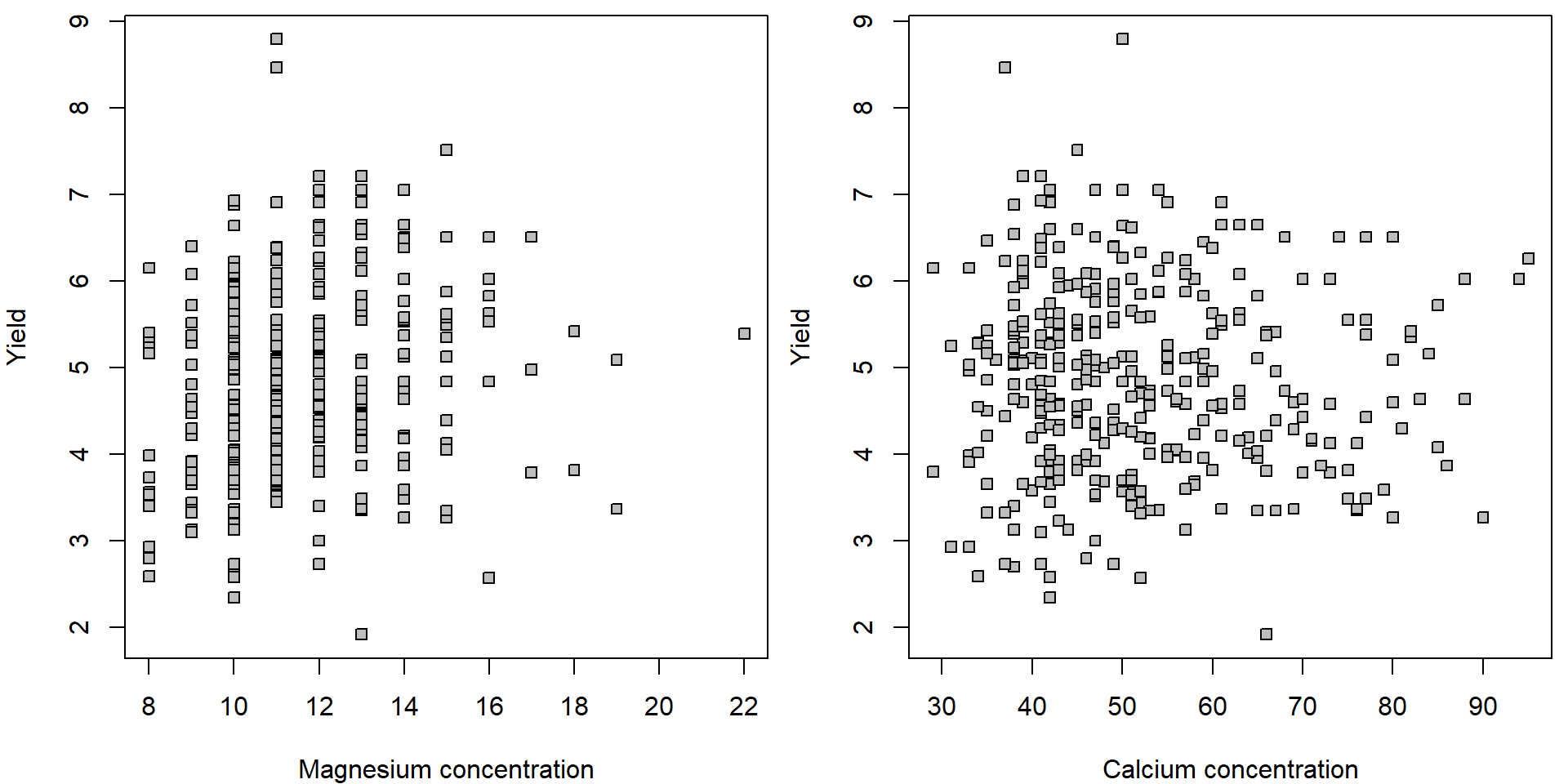

## Max. :8.79 Max. :657 Max. :72.0 Max. :580 Max. :95.0 Max. :22.0Firstly, we visualize marginal dependence of yield on the magnesium concentration and the calcium concentration.

par(mfrow = c(1,2), mar = c(4,4,0.5,0.5))

plot(yield ~ Mg, data = Dris, pch = 22, bg = "gray",

xlab = "Magnesium concentration", ylab = "Yield")

plot(yield ~ Ca, data = Dris, pch = 22, bg = "gray",

xlab = "Calcium concentration", ylab = "Yield")

Note, that the points are not equally distributed along the \(x\) axis. There are way more points at the left corners of the scatterplots than in the right parts. This suggests that a logarithmic transformation (of the magnesium and calcium concentration) could help to align the data more uniformly along the \(x\) axis,

For more intuitive interpretation we use the logarithm with the base

two (meaning that unit increase of the logaritmically transformed

covariates, i.e.,

\(\log_2(x) + 1 = \log_2 (x) \cdot \log_2 2 =

\log_2 (2 x)\) equals double amount of the original

covariate).

Dris <- transform(Dris,

lMg = log2(Mg), lMg2 = (log2(Mg))^2,

lCa = log2(Ca), lCa2 = (log2(Ca))^2,

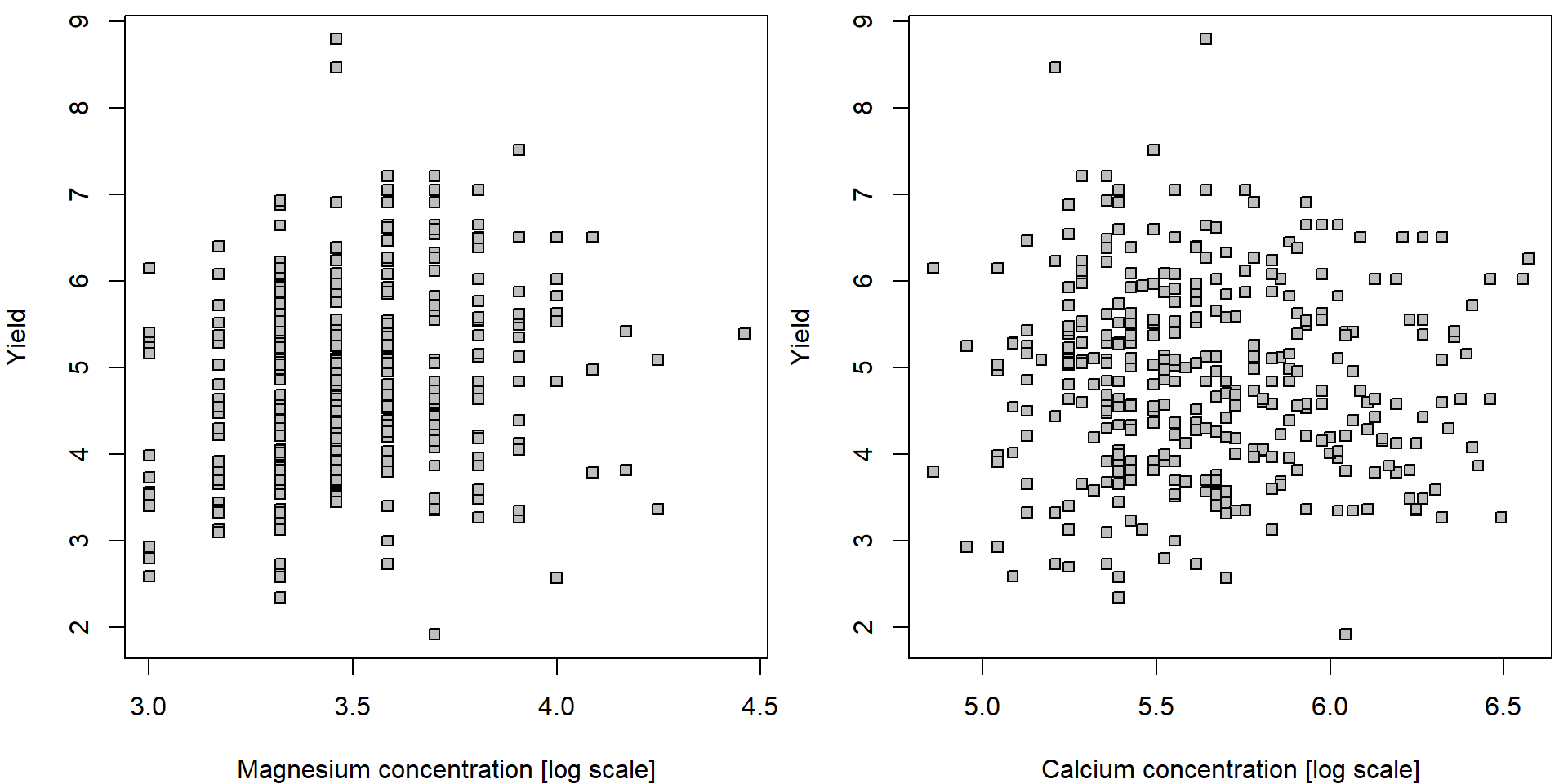

lN = log2(N), lN2=(log2(N))^2)The same scatterplots but x-axis in on logarithmic scale.

par(mfrow = c(1,2), mar = c(4,4,0.5,0.5))

plot(yield ~ lMg, data = Dris, pch = 22, bg = "gray",

xlab = "Magnesium concentration [log scale]", ylab = "Yield")

plot(yield ~ lCa, data = Dris, pch = 22, bg = "gray",

xlab = "Calcium concentration [log scale]", ylab = "Yield") Plotting functions in R also provide an option to turn an axis to the

log scale. It does not offer you the option to choose the base but

adjusts x-axis to keep the labels of original values. Now the labels on

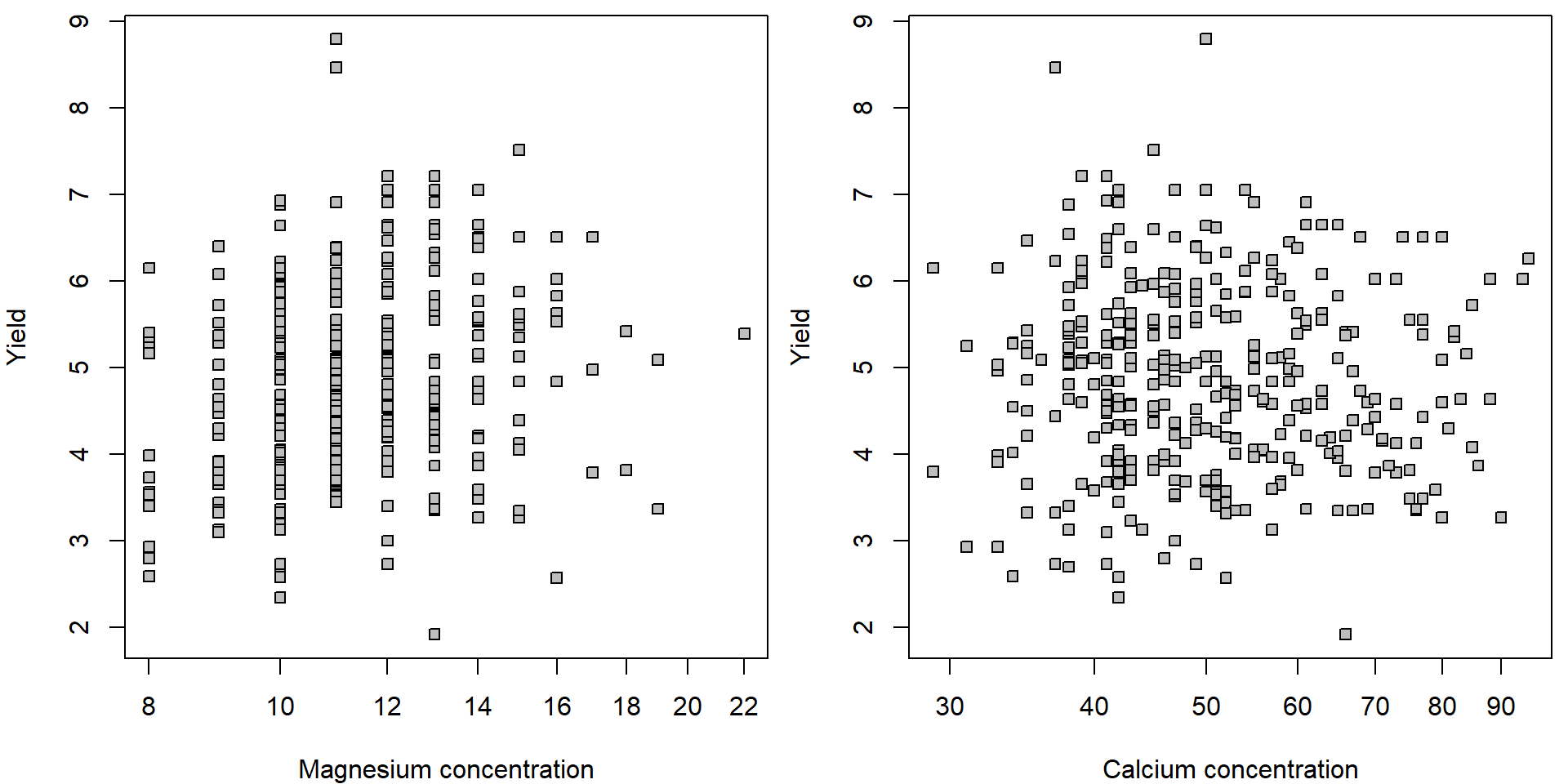

the x-axis are not equidistant:

Plotting functions in R also provide an option to turn an axis to the

log scale. It does not offer you the option to choose the base but

adjusts x-axis to keep the labels of original values. Now the labels on

the x-axis are not equidistant:

par(mfrow = c(1,2), mar = c(4,4,0.5,0.5))

plot(yield ~ Mg, data = Dris, pch = 22, bg = "gray", log = "x",

xlab = "Magnesium concentration", ylab = "Yield")

plot(yield ~ Ca, data = Dris, pch = 22, bg = "gray", log = "x",

xlab = "Calcium concentration", ylab = "Yield")

For the following, we will use the transformed data.

Individual work

- Visualize the marginal distributions of the original and the transformed variables. Comment on the skewness of the distribution.

- Use the techniques from previous exercises to reason the log-trend compared to the linear trend.

- For the log-transformed covariates suppress the x-axis labels

(

xaxt = "n") and add your own labels with original scale withaxis().

1. Simple and multiple linear regression models

Let us start with two simple regression models (one explanatory variable only) and we compare these models with a multiple regression model (with both explanatory variables being used at the same time).

summary(m10 <- lm(yield ~ lMg, data = Dris))##

## Call:

## lm(formula = yield ~ lMg, data = Dris)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.119 -0.741 -0.074 0.745 3.984

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1.485 0.779 1.91 0.057 .

## lMg 0.961 0.221 4.35 1.8e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.07 on 366 degrees of freedom

## Multiple R-squared: 0.0491, Adjusted R-squared: 0.0465

## F-statistic: 18.9 on 1 and 366 DF, p-value: 1.77e-05summary(m01 <- lm(yield ~ lCa, data = Dris))##

## Call:

## lm(formula = yield ~ lCa, data = Dris)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.902 -0.809 -0.039 0.721 3.928

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.456 0.912 5.98 5.2e-09 ***

## lCa -0.105 0.161 -0.65 0.52

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.1 on 366 degrees of freedom

## Multiple R-squared: 0.00115, Adjusted R-squared: -0.00158

## F-statistic: 0.423 on 1 and 366 DF, p-value: 0.516Both marginal models can be now compared with a larger model, containing both predictor variables additively:

summary(m11 <- lm(yield ~ lMg + lCa, data = Dris))##

## Call:

## lm(formula = yield ~ lMg + lCa, data = Dris)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.951 -0.721 -0.084 0.770 4.012

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.403 0.953 3.57 0.00040 ***

## lMg 1.400 0.253 5.53 6.1e-08 ***

## lCa -0.614 0.180 -3.40 0.00074 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.05 on 365 degrees of freedom

## Multiple R-squared: 0.0784, Adjusted R-squared: 0.0733

## F-statistic: 15.5 on 2 and 365 DF, p-value: 3.4e-07Note, there are quite different values for the estimated parameters when being interested in the effect of magnesium or the effect of calcium. Which model should be taken as a reference one?

Individual work

Interpret the models fitted above – try to explain the meaning of the estimated parameters. In particular, focus on the following:

- Interpret the estimated regression coefficients in the models.

- What is being tested on the rows started with ‘lMg’ and ‘lCa’?

- When comparing all three models, which one is, according your our opinion, the most suitable one?

Quadratic trend

In the following, we will try slightly more complex (multiple) regression models:

summary(m20 <- lm(yield ~ lMg + lMg2, data = Dris))##

## Call:

## lm(formula = yield ~ lMg + lMg2, data = Dris)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.194 -0.764 -0.041 0.809 3.896

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -18.752 7.628 -2.46 0.0144 *

## lMg 12.381 4.288 2.89 0.0041 **

## lMg2 -1.603 0.601 -2.67 0.0080 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.06 on 365 degrees of freedom

## Multiple R-squared: 0.0673, Adjusted R-squared: 0.0622

## F-statistic: 13.2 on 2 and 365 DF, p-value: 2.99e-06summary(m02 <- lm(yield ~ lCa + lCa2, data = Dris))##

## Call:

## lm(formula = yield ~ lCa + lCa2, data = Dris)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.908 -0.831 -0.049 0.695 3.889

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -4.611 12.796 -0.36 0.72

## lCa 3.434 4.490 0.76 0.44

## lCa2 -0.310 0.393 -0.79 0.43

##

## Residual standard error: 1.1 on 365 degrees of freedom

## Multiple R-squared: 0.00285, Adjusted R-squared: -0.00261

## F-statistic: 0.522 on 2 and 365 DF, p-value: 0.594Is there some statistical improvements in the two models above?

And what about the following models?

summary(m21 <- lm(yield ~ lMg + lMg2 + lCa, data = Dris)) ##

## Call:

## lm(formula = yield ~ lMg + lMg2 + lCa, data = Dris)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.026 -0.755 -0.107 0.747 3.923

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -16.937 7.535 -2.25 0.02519 *

## lMg 12.884 4.228 3.05 0.00248 **

## lMg2 -1.612 0.592 -2.72 0.00682 **

## lCa -0.616 0.179 -3.44 0.00064 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.05 on 364 degrees of freedom

## Multiple R-squared: 0.0967, Adjusted R-squared: 0.0893

## F-statistic: 13 on 3 and 364 DF, p-value: 4.41e-08summary(m22 <- lm(yield ~ lMg + lMg2 + lCa + lCa2, data = Dris)) ##

## Call:

## lm(formula = yield ~ lMg + lMg2 + lCa + lCa2, data = Dris)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.026 -0.758 -0.108 0.746 3.918

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -18.2667 13.2980 -1.37 0.1704

## lMg 12.7832 4.3142 2.96 0.0032 **

## lMg2 -1.5977 0.6042 -2.64 0.0085 **

## lCa -0.0858 4.3716 -0.02 0.9843

## lCa2 -0.0464 0.3821 -0.12 0.9035

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.05 on 363 degrees of freedom

## Multiple R-squared: 0.0968, Adjusted R-squared: 0.0868

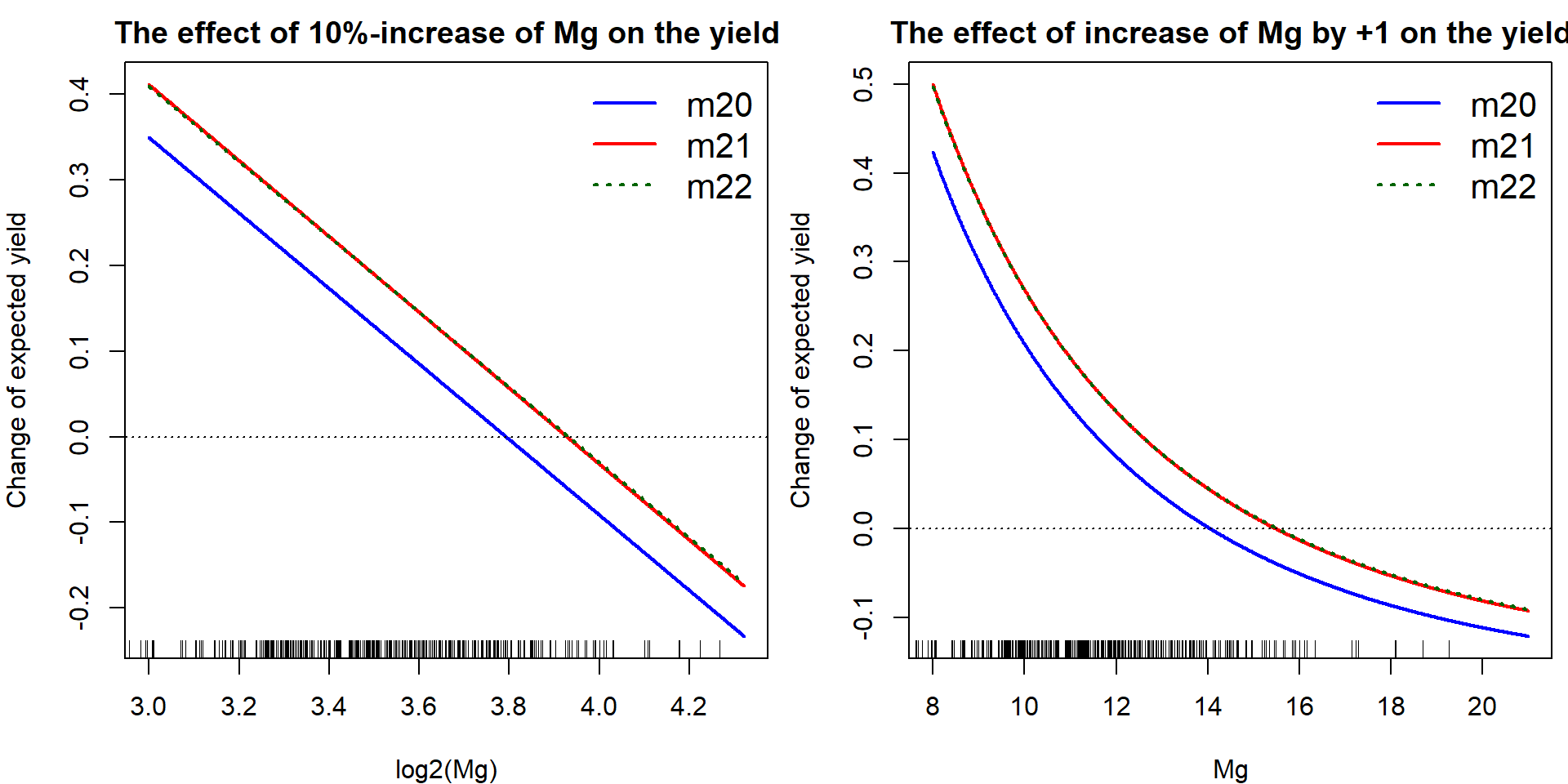

## F-statistic: 9.72 on 4 and 363 DF, p-value: 1.76e-07What is now the effect of the magnesium concentration on the expected (estimated) amount of yield? How this effect can be quantified using different models?

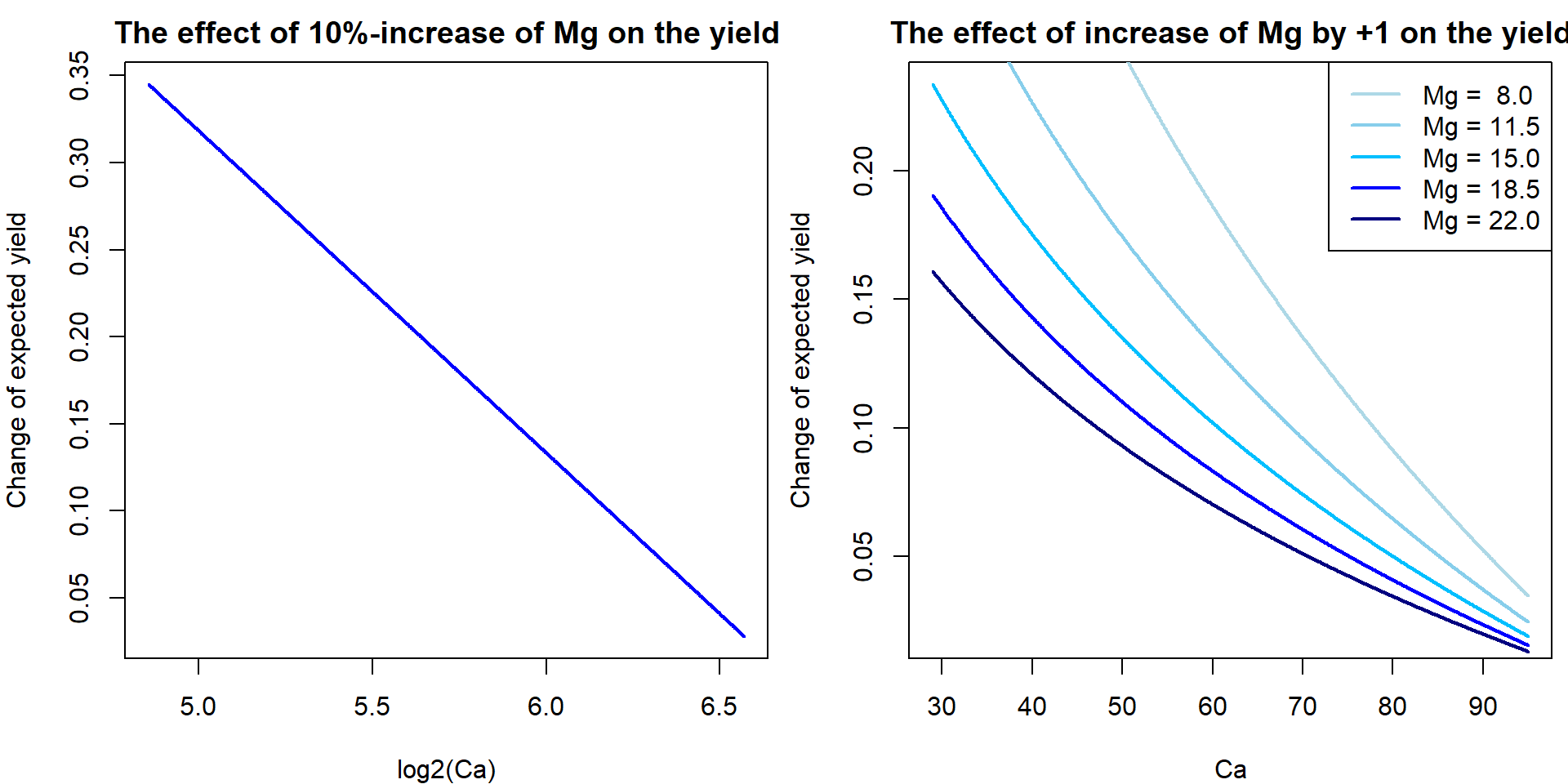

We can actually visualize the effect of the magnesium concentration on the yield (using different models fitted above). Specifically, we are interested in the effect of the change of lMg by additional log2(1.1) which corresponds with the increase of Mg by 10%). What will be the corresponding change of the expected amount of yield, i.e. \(\mathsf{E} [\mathtt{yield} | \mathtt{lMg}, \mathtt{lCa}]\)?

Dris <- transform(Dris, jMg = Mg + runif(nrow(Dris), -0.5, 0.5))

Dris <- transform(Dris, ljMg = log2(jMg))

eps <- 0.1

delta <- log2(1+eps)

lMg.grid <- seq(min(Dris$lMg), max(Dris$lMg)-delta, length = 1000)

effect.lMg <- function(b1,b2) b1*delta + b2*(2*delta*lMg.grid + delta^2)

YLIM <- range(effect.lMg(coef(m20)['lMg'], coef(m20)['lMg2']),

effect.lMg(coef(m21)['lMg'], coef(m21)['lMg2']),

effect.lMg(coef(m22)['lMg'], coef(m22)['lMg2']))

par(mfrow = c(1,2), mar = c(4,4,2,0.5))

plot(lMg.grid, effect.lMg(coef(m20)['lMg'], coef(m20)['lMg2']), type="l",

xlab = "log2(Mg)", ylab = "Change of expected yield",

main = "The effect of 10%-increase of Mg on the yield",

col = "blue", lwd = 2, ylim = YLIM)

lines(lMg.grid, effect.lMg(coef(m21)['lMg'], coef(m21)['lMg2']),

lwd=2, col="red")

lines(lMg.grid, effect.lMg(coef(m22)['lMg'], coef(m22)['lMg2']),

lwd=2, col="darkgreen", lty="dotted")

abline(h=0, lty="dotted")

legend("topright", legend=c("m20", "m21", "m22"), bty = "n",

col=c("blue", "red", "darkgreen"), lwd=c(2,2,2),

lty=c("solid", "solid", "dotted"), cex=1.3)

rug(Dris$ljMg)

Mg.grid <- seq(min(Dris$Mg), max(Dris$Mg)-1, length = 1000);

effect.Mg <- function(b1,b2){

return(b1*(log2(Mg.grid+1) - log2(Mg.grid)) +

b2*((log2(1+Mg.grid))^2 - (log2(Mg.grid))^2))

}

YLIM <- range(effect.Mg(coef(m20)['lMg'], coef(m20)['lMg2']),

effect.Mg(coef(m21)['lMg'], coef(m21)['lMg2']),

effect.Mg(coef(m22)['lMg'], coef(m22)['lMg2']))

plot(Mg.grid, effect.Mg(coef(m20)['lMg'], coef(m20)['lMg2']), type="l",

xlab = "Mg", ylab = "Change of expected yield",

main = "The effect of increase of Mg by +1 on the yield",

col = "blue", lwd=2, ylim=YLIM)

lines(Mg.grid, effect.Mg(coef(m21)['lMg'], coef(m21)['lMg2']),

lwd=2, col="red")

lines(Mg.grid, effect.Mg(coef(m22)['lMg'], coef(m22)['lMg2']),

lwd=2, col="darkgreen", lty="dotted")

abline(h=0, lty="dotted")

legend("topright", legend=c("m20", "m21", "m22"), bty = "n",

col=c("blue", "red", "darkgreen"), lwd=c(2,2,2),

lty=c("solid", "solid", "dotted"), cex=1.3)

rug(Dris$jMg)

Individual work

- Considering the models above, how would you (statistically) answer a question whether given the information about the magnesium (‘Mg’) the amount of yield is independent of the concentration of the calcium?

- How would you test that given Mg (with the quadratic dependence) the amount of ‘yield’ is independent of Ca?

- Can we say that the amount of yield is independent of Ca?

- Can we say that given Mg and Ca the amount of yield is independent of N?

2. Regression models with simple interations

The interactions in the model allow for modelling a flexible effect

of some covariate given the value of some other covariate (or multiple

covariates). We consider a simple model with one interaction term

(denoted as lMg:lCa):

summary(m1_inter <- lm(yield ~ lMg + lCa + lMg:lCa, data = Dris))##

## Call:

## lm(formula = yield ~ lMg + lCa + lMg:lCa, data = Dris)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.983 -0.733 -0.128 0.780 3.953

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -23.653 11.878 -1.99 0.0472 *

## lMg 9.046 3.356 2.70 0.0073 **

## lCa 4.159 2.097 1.98 0.0480 *

## lMg:lCa -1.346 0.589 -2.29 0.0229 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.05 on 364 degrees of freedom

## Multiple R-squared: 0.0914, Adjusted R-squared: 0.0839

## F-statistic: 12.2 on 3 and 364 DF, p-value: 1.25e-07- What is the interpretation of the estimated parameters now?

- What is the effect of the magnesium concentration and the calcium concentration on the amount of yield?

- Compare the interaction model above with the model below (multiple model with three explanatory variables, where the last variable \(Z\) is created artificially from the magnesium concentration and the calcium concentration).

Dris$Z <- Dris$lMg * Dris$lCa

summary(m1_Z <- lm(yield ~ lMg + lCa + Z, data = Dris))##

## Call:

## lm(formula = yield ~ lMg + lCa + Z, data = Dris)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.983 -0.733 -0.128 0.780 3.953

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -23.653 11.878 -1.99 0.0472 *

## lMg 9.046 3.356 2.70 0.0073 **

## lCa 4.159 2.097 1.98 0.0480 *

## Z -1.346 0.589 -2.29 0.0229 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.05 on 364 degrees of freedom

## Multiple R-squared: 0.0914, Adjusted R-squared: 0.0839

## F-statistic: 12.2 on 3 and 364 DF, p-value: 1.25e-07Again, we can quantify the effect of the magnesium concentration on the yield however, this time we have to properly take into account the underlying concentration of calcium. How can be this done?

Mg.grid <- seq(min(Dris$Mg), max(Dris$Mg), length = 5)

blues <- c("lightblue", "skyblue", "deepskyblue", "blue", "navyblue")

Ca.grid <- seq(min(Dris$Ca), max(Dris$Ca), length = 1000)

lCa.grid <- seq(min(Dris$lCa), max(Dris$lCa), length = 1000)

eps <- 0.1;

delta <- log2(1+eps)

par(mfrow = c(1, 2), mar = c(4,4,2,0.5))

plot(lCa.grid,

delta*coef(m1_inter)['lMg'] + delta*coef(m1_inter)['lMg:lCa'] * lCa.grid,

xlab = "log2(Ca)", ylab = "Change of expected yield",

main = "The effect of 10%-increase of Mg on the yield",

col = "blue", type="l", lwd=2)

plot(Ca.grid,

coef(m1_inter)['lMg'] * (log2(Mg.grid[3]+1)-log2(Mg.grid[3])) +

coef(m1_inter)['lMg:lCa'] * (log2(Mg.grid[3]+1)-log2(Mg.grid[3])) * log2(Ca.grid),

xlab = "Ca", ylab = "Change of expected yield",

main = "The effect of increase of Mg by +1 on the yield",

type="n")

for(mg in 1:5){

lines(Ca.grid,

coef(m1_inter)['lMg'] * (log2(Mg.grid[mg]+1)-log2(Mg.grid[mg])) +

coef(m1_inter)['lMg:lCa'] * (log2(Mg.grid[mg]+1)-log2(Mg.grid[mg])) * log2(Ca.grid),

col = blues[mg], lty=1, lwd=2)

}

legend("topright", paste0("Mg = ", format(Mg.grid, digits = 3)),

col = blues, lty = 1, lwd = 2)

3. Interations with categorical covariates

Let us return to the dataset from Exercise 2:

peat <- read.csv("http://www.karlin.mff.cuni.cz/~vavraj/nmfm334/data/peat.csv",

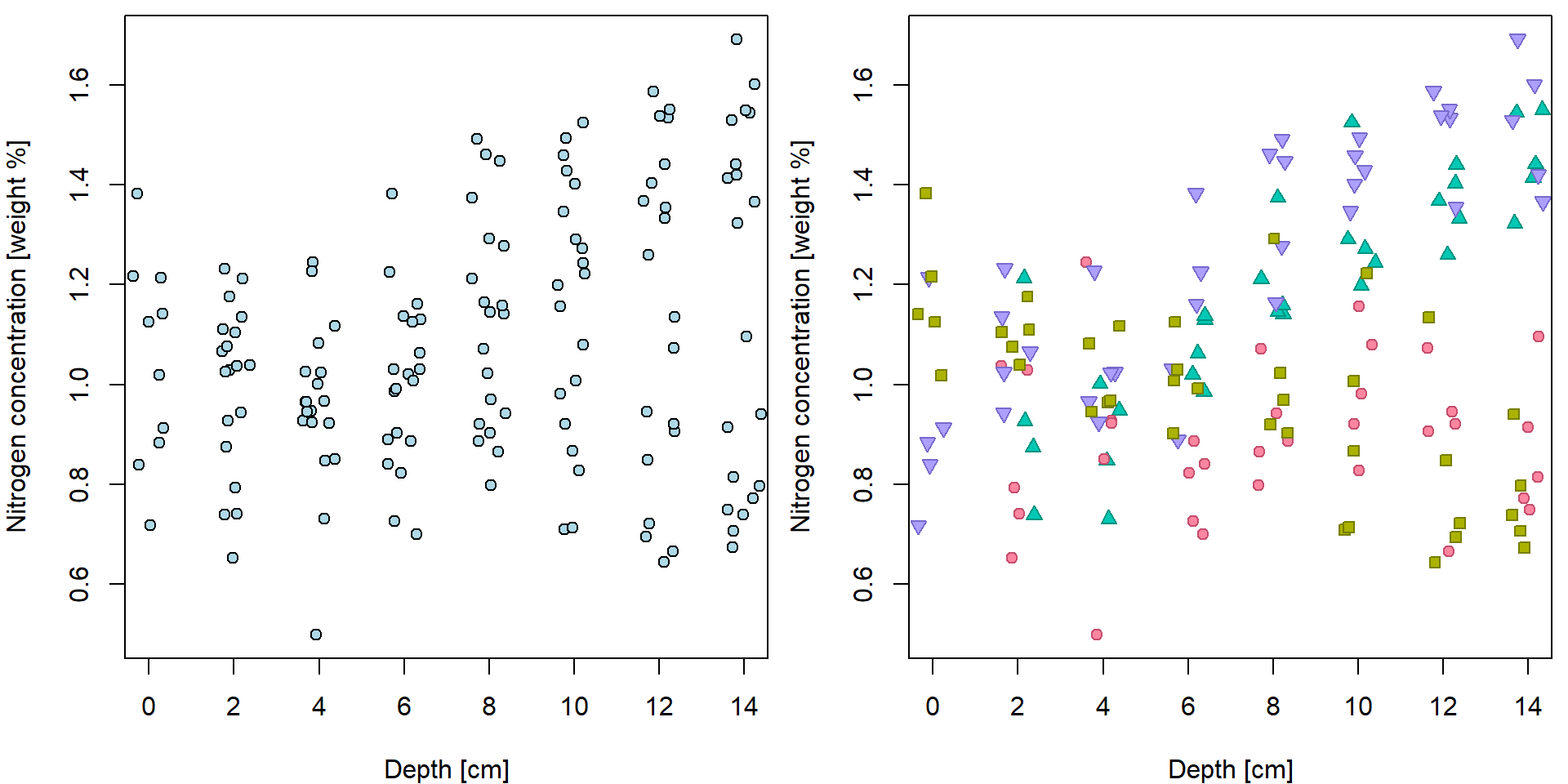

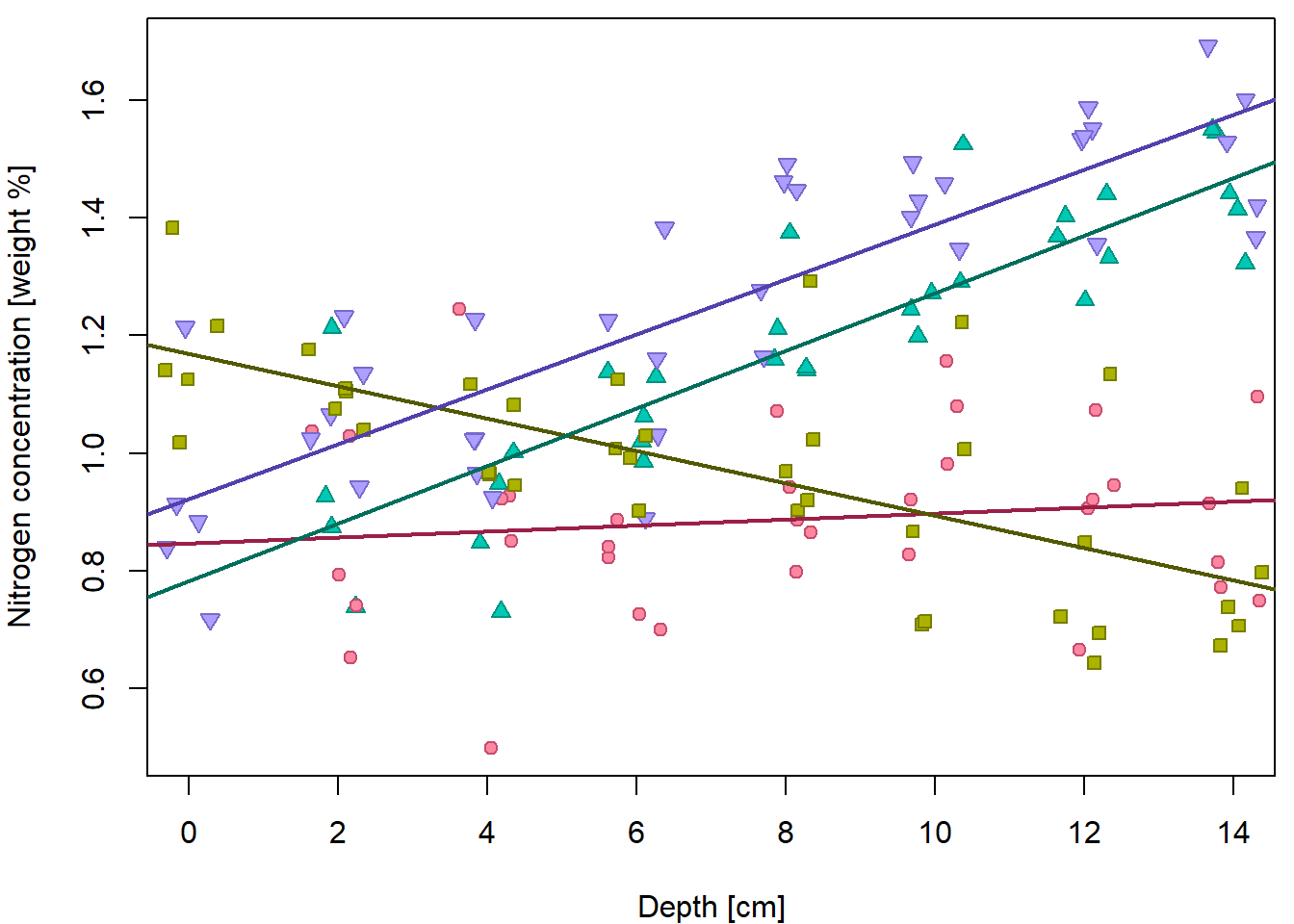

header = TRUE, stringsAsFactors = TRUE)We have tried to explore the relationship between Nitrogen concentration and depth:

XLAB <- "Depth [cm]"

YLAB <- "Nitrogen concentration [weight %]"

XLIM <- range(peat[, "depth"])

YLIM <- range(peat[, "N"])

PCH <- c(21, 22, 24, 25)

DARK <- rainbow_hcl(4, c = 80, l = 35)

COL <- rainbow_hcl(4, c = 80, l = 50)

BGC <- rainbow_hcl(4, c = 80, l = 70)

names(PCH) <- names(COL) <- names(BGC) <- levels(peat[, "group"])

par(mfrow = c(1,2), mar = c(4,4,0.5,0.5))

plot(N ~ jitter(depth), data = peat, pch = 21, bg = "lightblue",

xlab = XLAB, ylab = YLAB, xlim = XLIM, ylim = YLIM)

plot(N ~ jitter(depth), data = peat,

pch = PCH[group], col = COL[group], bg = BGC[group],

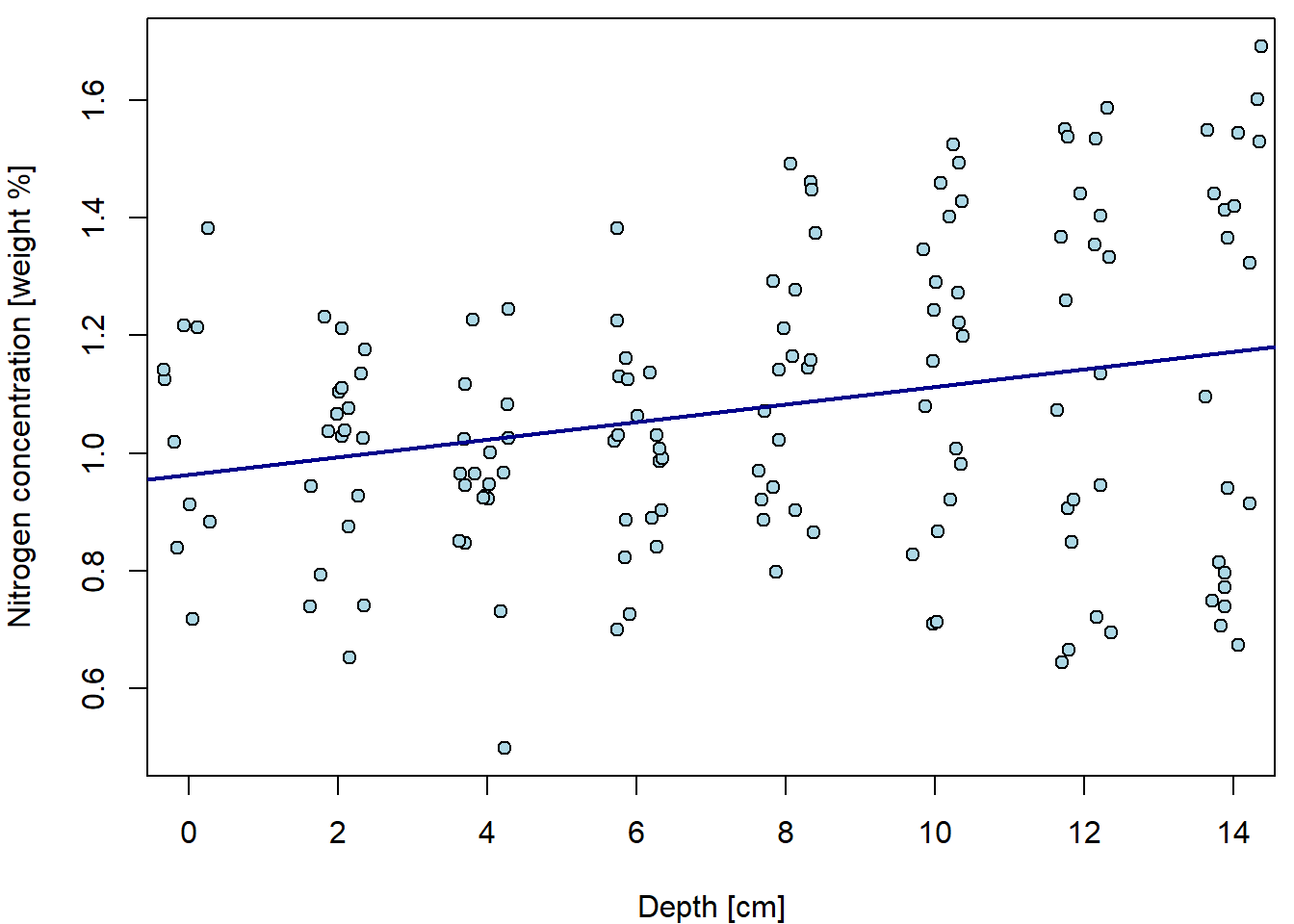

xlab = XLAB, ylab = YLAB, xlim = XLIM, ylim = YLIM) When we do not distinguish groups, we barely see any trend. Fitted line

is only very slowly increasing.

When we do not distinguish groups, we barely see any trend. Fitted line

is only very slowly increasing.

fit1 <- lm(N ~ depth, data = peat)

summary(fit1)##

## Call:

## lm(formula = N ~ depth, data = peat)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.5250 -0.1775 -0.0043 0.1905 0.5186

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.96394 0.04060 23.7 <2e-16 ***

## depth 0.01497 0.00467 3.2 0.0017 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.247 on 146 degrees of freedom

## Multiple R-squared: 0.0657, Adjusted R-squared: 0.0593

## F-statistic: 10.3 on 1 and 146 DF, p-value: 0.00166par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plot(N ~ jitter(depth), data = peat, pch = 21, bg = "lightblue",

xlab = XLAB, ylab = YLAB, xlim = XLIM, ylim = YLIM)

abline(fit1, col = "darkblue", lwd = 2)

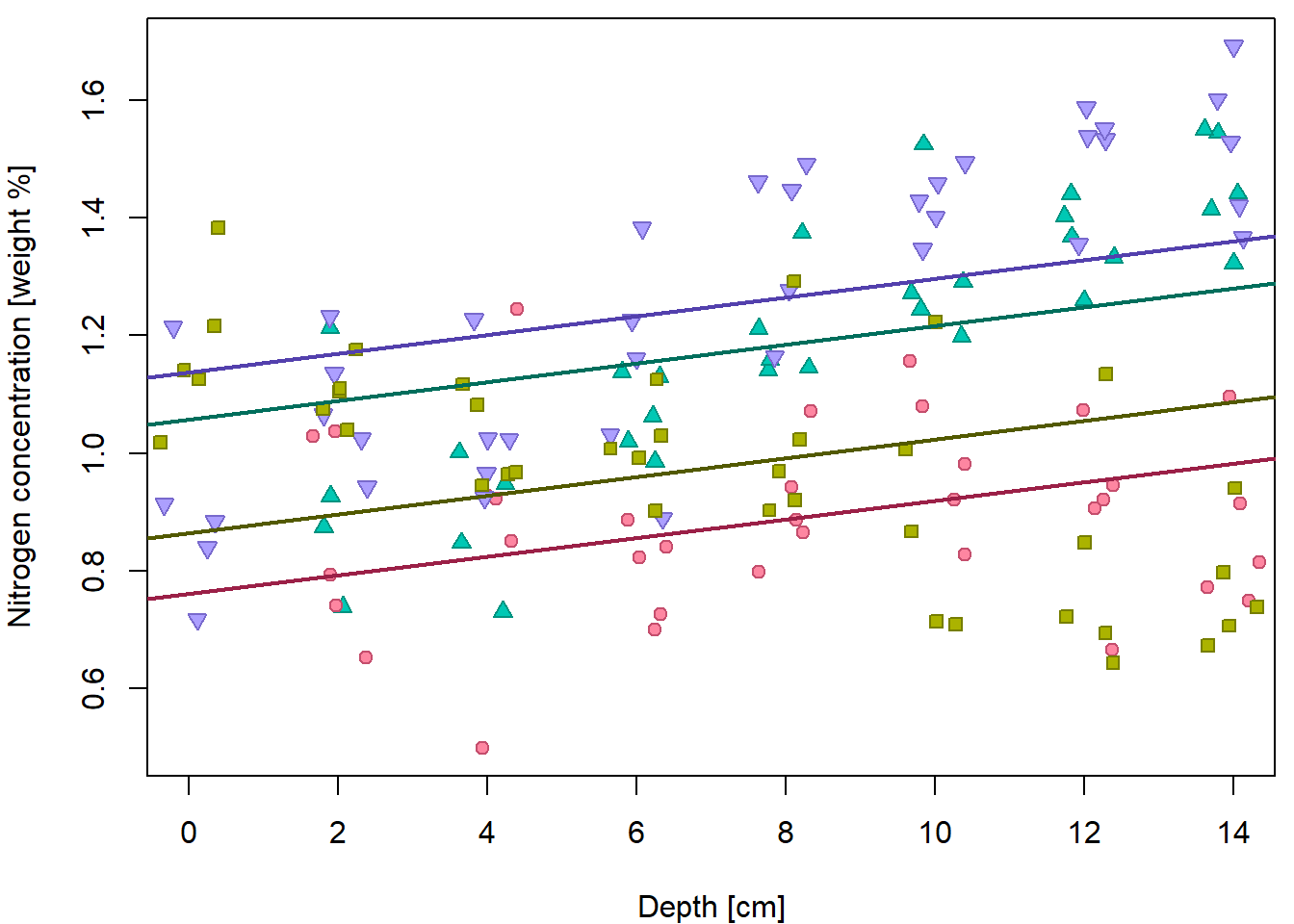

In order to fit line for each group we had to create a subset and estimate line for each subset. We would like to avoid this practice and fit that with one model.

However, additive model is not enough. Remember, additive model leads to parallel lines!

fit2 <- lm(N ~ depth + group, data = peat)

summary(fit2)##

## Call:

## lm(formula = N ~ depth + group, data = peat)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.4206 -0.1416 0.0143 0.1541 0.5177

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.76028 0.04494 16.92 < 2e-16 ***

## depth 0.01588 0.00377 4.22 4.4e-05 ***

## groupCB-VJJ 0.10475 0.04582 2.29 0.024 *

## groupVJJ 0.29753 0.04789 6.21 5.4e-09 ***

## groupVJJ-CB 0.37788 0.04582 8.25 9.6e-14 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.197 on 143 degrees of freedom

## Multiple R-squared: 0.416, Adjusted R-squared: 0.4

## F-statistic: 25.5 on 4 and 143 DF, p-value: 6.14e-16par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plot(N ~ jitter(depth), data = peat,

pch = PCH[group], col = COL[group], bg = BGC[group],

xlab = XLAB, ylab = YLAB, xlim = XLIM, ylim = YLIM)

intercepts <- coef(fit2)[1] + c(0, coef(fit2)[3:5])

slope <- coef(fit2)[2]

for(g in 1:4){

abline(a = intercepts[g], b = slope, col = DARK[g], lwd = 2)

} This does not fit the data well. We need specific slopes for each group.

And this is where interactions play the key role.

This does not fit the data well. We need specific slopes for each group.

And this is where interactions play the key role.

An interaction term depth * group in this case leads to

the following model: \[\begin{multline}

\mathsf{E} \left[N | D=d, G=g\right] =

\beta_1

+ \beta_2 d

+ \beta_3 \mathbf{1}(g=\mathtt{CB-VJJ})

+ \beta_4 \mathbf{1}(g=\mathtt{VJJ})

+ \beta_5 \mathbf{1}(g=\mathtt{VJJ-CB})

+ \\

+ \beta_6 d \mathbf{1}(g=\mathtt{CB-VJJ})

+ \beta_7 d \mathbf{1}(g=\mathtt{VJJ})

+ \beta_8 d \mathbf{1}(g=\mathtt{VJJ-CB})

\end{multline}\] Rewriting it for groups separately we have:

\[

\begin{aligned}

\mathsf{E} \left[N | D=d, G=\mathtt{CB}\right]

&= \beta_1 + \beta_2 d

\\

\mathsf{E} \left[N | D=d, G=\mathtt{CB-VJJ}\right]

&= (\beta_1 + \beta_3) + (\beta_2 + \beta_6) d

\\

\mathsf{E} \left[N | D=d, G=\mathtt{VJJ}\right]

&= (\beta_1 + \beta_4) + (\beta_2 + \beta_7) d

\\

\mathsf{E} \left[N | D=d, G=\mathtt{VJJ-CB}\right]

&= (\beta_1 + \beta_5) + (\beta_2 + \beta_8) d

\\

\end{aligned}

\]

fit3 <- lm(N ~ depth * group, data = peat)

summary(fit3)##

## Call:

## lm(formula = N ~ depth * group, data = peat)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.3683 -0.0847 -0.0079 0.0611 0.3771

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.84696 0.05119 16.54 < 2e-16 ***

## depth 0.00505 0.00572 0.88 0.38

## groupCB-VJJ 0.32211 0.06442 5.00 1.7e-06 ***

## groupVJJ -0.06404 0.07537 -0.85 0.40

## groupVJJ-CB 0.07591 0.06442 1.18 0.24

## depth:groupCB-VJJ -0.03260 0.00739 -4.41 2.0e-05 ***

## depth:groupVJJ 0.04394 0.00831 5.29 4.7e-07 ***

## depth:groupVJJ-CB 0.04159 0.00739 5.63 9.6e-08 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.135 on 140 degrees of freedom

## Multiple R-squared: 0.731, Adjusted R-squared: 0.717

## F-statistic: 54.2 on 7 and 140 DF, p-value: <2e-16par(mfrow = c(1,1), mar = c(4,4,0.5,0.5))

plot(N ~ jitter(depth), data = peat,

pch = PCH[group], col = COL[group], bg = BGC[group],

xlab = XLAB, ylab = YLAB, xlim = XLIM, ylim = YLIM)

intercepts <- coef(fit3)[1] + c(0, coef(fit3)[3:5])

slopes <- coef(fit3)[2] + c(0, coef(fit3)[6:8])

for(g in 1:4){

abline(a = intercepts[g], b = slopes[g], col = DARK[g], lwd = 2)

}

Individual work

- How would you express interaction coefficients in terms of expected values? How would you interpret them?

- What is the difference between the two approaches: a) fit line for each subset of data, b) interaction model in terms of residual variance?

4. Complex model with many regressors and interactions

Now, we will consider another dataset from the mffSM

packages.

Data are provided from the American Association of University Professors

(AAUP) on annual faculty salary survey of American colleges and

universities, 1995.

data(AAUP, package = "mffSM")

dim(AAUP)## [1] 1161 17head(AAUP)## FICE name state type salary.prof salary.assoc salary.assist salary compens.prof

## 1 1061 Alaska Pacific University AK IIB 454 382 362 382 567

## 2 1063 Univ.Alaska-Fairbanks AK I 686 560 432 508 914

## 3 1065 Univ.Alaska-Southeast AK IIA 533 494 329 415 716

## 4 11462 Univ.Alaska-Anchorage AK IIA 612 507 414 498 825

## 5 1002 Alabama Agri.&Mech. Univ. AL IIA 442 369 310 350 530

## 6 1004 University of Montevallo AL IIA 441 385 310 388 542

## compens.assoc compens.assist compens n.prof n.assoc n.assist n.instruct n.faculty

## 1 485 471 487 6 11 9 4 32

## 2 753 572 677 74 125 118 40 404

## 3 663 442 559 9 26 20 9 70

## 4 681 557 670 115 124 101 21 392

## 5 444 376 423 59 77 102 24 262

## 6 473 383 477 57 33 35 2 127summary(AAUP)## FICE name state type salary.prof salary.assoc salary.assist

## Min. : 1002 Length:1161 PA : 85 I :180 Min. : 270 Min. :234 Min. :199

## 1st Qu.: 1903 Class :character NY : 81 IIA:363 1st Qu.: 440 1st Qu.:367 1st Qu.:313

## Median : 2668 Mode :character CA : 54 IIB:618 Median : 506 Median :413 Median :349

## Mean : 3052 TX : 54 Mean : 524 Mean :416 Mean :352

## 3rd Qu.: 3420 OH : 53 3rd Qu.: 600 3rd Qu.:461 3rd Qu.:388

## Max. :29269 IL : 50 Max. :1009 Max. :733 Max. :576

## (Other):784 NA's :68 NA's :36 NA's :24

## salary compens.prof compens.assoc compens.assist compens n.prof n.assoc

## Min. :232 Min. : 319 Min. :292 Min. :246 Min. : 265 Min. : 0.0 Min. : 0.0

## 1st Qu.:352 1st Qu.: 547 1st Qu.:456 1st Qu.:389 1st Qu.: 436 1st Qu.: 18.0 1st Qu.: 19.0

## Median :407 Median : 635 Median :519 Median :437 Median : 510 Median : 40.0 Median : 38.0

## Mean :420 Mean : 654 Mean :524 Mean :442 Mean : 527 Mean : 95.1 Mean : 72.4

## 3rd Qu.:475 3rd Qu.: 753 3rd Qu.:583 3rd Qu.:493 3rd Qu.: 600 3rd Qu.:105.0 3rd Qu.: 89.0

## Max. :866 Max. :1236 Max. :909 Max. :717 Max. :1075 Max. :997.0 Max. :721.0

## NA's :68 NA's :36 NA's :24

## n.assist n.instruct n.faculty

## Min. : 0.0 Min. : 0.0 Min. : 7

## 1st Qu.: 21.0 1st Qu.: 2.0 1st Qu.: 68

## Median : 40.0 Median : 6.0 Median : 132

## Mean : 68.6 Mean : 12.7 Mean : 257

## 3rd Qu.: 92.0 3rd Qu.: 14.0 3rd Qu.: 323

## Max. :510.0 Max. :178.0 Max. :2261

## ### A data subset containing only relevant variables

aaup <- subset(AAUP, select = c("FICE", "name", "state", "type", "n.prof",

"n.assoc", "n.assist", "salary.assoc"))

dim(aaup)## [1] 1161 8head(aaup)## FICE name state type n.prof n.assoc n.assist salary.assoc

## 1 1061 Alaska Pacific University AK IIB 6 11 9 382

## 2 1063 Univ.Alaska-Fairbanks AK I 74 125 118 560

## 3 1065 Univ.Alaska-Southeast AK IIA 9 26 20 494

## 4 11462 Univ.Alaska-Anchorage AK IIA 115 124 101 507

## 5 1002 Alabama Agri.&Mech. Univ. AL IIA 59 77 102 369

## 6 1004 University of Montevallo AL IIA 57 33 35 385summary(aaup)## FICE name state type n.prof n.assoc n.assist

## Min. : 1002 Length:1161 PA : 85 I :180 Min. : 0.0 Min. : 0.0 Min. : 0.0

## 1st Qu.: 1903 Class :character NY : 81 IIA:363 1st Qu.: 18.0 1st Qu.: 19.0 1st Qu.: 21.0

## Median : 2668 Mode :character CA : 54 IIB:618 Median : 40.0 Median : 38.0 Median : 40.0

## Mean : 3052 TX : 54 Mean : 95.1 Mean : 72.4 Mean : 68.6

## 3rd Qu.: 3420 OH : 53 3rd Qu.:105.0 3rd Qu.: 89.0 3rd Qu.: 92.0

## Max. :29269 IL : 50 Max. :997.0 Max. :721.0 Max. :510.0

## (Other):784

## salary.assoc

## Min. :234

## 1st Qu.:367

## Median :413

## Mean :416

## 3rd Qu.:461

## Max. :733

## NA's :36### considering only a subset of covariates

Data <- subset(aaup,

complete.cases(aaup[, c("salary.assoc", "n.prof", "n.assoc", "n.assist", "type")]))Individual work

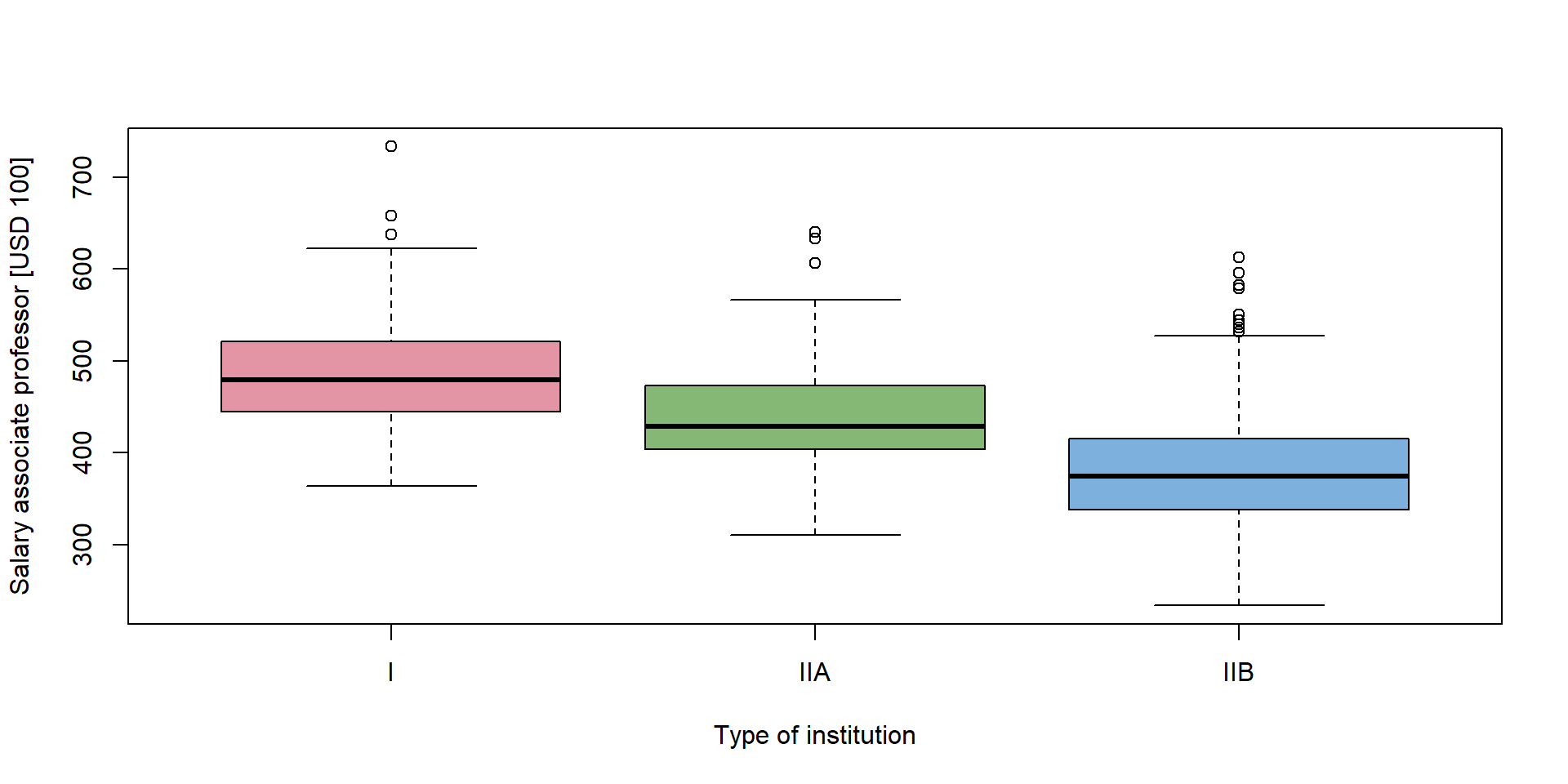

- Consider the dataset on annual income of university professors in USA and perform simple exploratory analysis.

- Focus on average yearly salaries for different types of the universities.

- In our exploratory analysis try to take into account also an information about different numbers of university professors (of different ranks) at the given university.

COL3 <- rainbow_hcl(3)

plot(salary.assoc ~ type, data = aaup,

xlab = "Type of institution", ylab = "Salary associate professor [USD 100]",

col = COL3)

We will fit a few different models that we will compare later. Firstly, a simple additive model:

summary(m1orig <- lm(salary.assoc ~ type + n.prof + n.assoc + n.assist, data = Data))##

## Call:

## lm(formula = salary.assoc ~ type + n.prof + n.assoc + n.assist,

## data = Data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -144.66 -39.68 -6.89 33.56 266.64

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 448.4968 8.6185 52.04 < 2e-16 ***

## typeIIA -19.4546 7.1898 -2.71 0.00692 **

## typeIIB -70.5694 8.2073 -8.60 < 2e-16 ***

## n.prof 0.1037 0.0276 3.76 0.00018 ***

## n.assoc 0.0707 0.0588 1.20 0.22940

## n.assist -0.0661 0.0645 -1.03 0.30538

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 58.2 on 1119 degrees of freedom

## Multiple R-squared: 0.341, Adjusted R-squared: 0.338

## F-statistic: 116 on 5 and 1119 DF, p-value: <2e-16Interpret the estimated parameters in the model and compare it with the interpretation of the estimated parameters in the following model:

Data <- transform(Data, n.prof40 = n.prof - 40,

n.assoc40 = n.assoc - 40,

n.assist40 = n.assist - 40)

summary(m1 <- lm(salary.assoc ~ type + n.prof40 + n.assoc40 + n.assist40, data = Data))##

## Call:

## lm(formula = salary.assoc ~ type + n.prof40 + n.assoc40 + n.assist40,

## data = Data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -144.66 -39.68 -6.89 33.56 266.64

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 452.8310 7.5027 60.36 < 2e-16 ***

## typeIIA -19.4546 7.1898 -2.71 0.00692 **

## typeIIB -70.5694 8.2073 -8.60 < 2e-16 ***

## n.prof40 0.1037 0.0276 3.76 0.00018 ***

## n.assoc40 0.0707 0.0588 1.20 0.22940

## n.assist40 -0.0661 0.0645 -1.03 0.30538

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 58.2 on 1119 degrees of freedom

## Multiple R-squared: 0.341, Adjusted R-squared: 0.338

## F-statistic: 116 on 5 and 1119 DF, p-value: <2e-16And, once more the same model, however, with a different

parametrization (contr.sum) used for the categorical

covariate of the university type:

m1B <- lm(salary.assoc ~ type +n.prof40 + n.assoc40 + n.assist40, data = Data,

contrasts = list(type = "contr.sum"))Finally, one more complicated model with interactions (in two different parametrization of the continuous covariates):

summary(m2orig <- lm(salary.assoc ~ type + n.prof*n.assoc + n.assist, data = Data)) ##

## Call:

## lm(formula = salary.assoc ~ type + n.prof * n.assoc + n.assist,

## data = Data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -137.78 -39.96 -6.68 33.11 280.20

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.95e+02 9.95e+00 39.66 < 2e-16 ***

## typeIIA 1.70e+00 7.24e+00 0.23 0.8146

## typeIIB -2.83e+01 8.99e+00 -3.14 0.0017 **

## n.prof 3.08e-01 3.38e-02 9.12 < 2e-16 ***

## n.assoc 4.17e-01 6.67e-02 6.25 5.8e-10 ***

## n.assist -1.34e-01 6.23e-02 -2.15 0.0318 *

## n.prof:n.assoc -8.44e-04 8.65e-05 -9.76 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 55.9 on 1118 degrees of freedom

## Multiple R-squared: 0.393, Adjusted R-squared: 0.389

## F-statistic: 120 on 6 and 1118 DF, p-value: <2e-16summary(m2 <- lm(salary.assoc ~ type + n.prof40*n.assoc40 + n.assist40, data = Data)) ##

## Call:

## lm(formula = salary.assoc ~ type + n.prof40 * n.assoc40 + n.assist40,

## data = Data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -137.78 -39.96 -6.68 33.11 280.20

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 4.17e+02 8.09e+00 51.52 < 2e-16 ***

## typeIIA 1.70e+00 7.24e+00 0.23 0.8146

## typeIIB -2.83e+01 8.99e+00 -3.14 0.0017 **

## n.prof40 2.74e-01 3.17e-02 8.64 < 2e-16 ***

## n.assoc40 3.83e-01 6.49e-02 5.90 4.7e-09 ***

## n.assist40 -1.34e-01 6.23e-02 -2.15 0.0318 *

## n.prof40:n.assoc40 -8.44e-04 8.65e-05 -9.76 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 55.9 on 1118 degrees of freedom

## Multiple R-squared: 0.393, Adjusted R-squared: 0.389

## F-statistic: 120 on 6 and 1118 DF, p-value: <2e-16Individual work

- Compare both models and interpret the estimated parameters.

- Focus on the estimated salaries for different types of university while controlling for specific amounts of the academic personel at the given university.

5. Hierarchical structure of the model

Finally, we will briefly compare a hierarchically well formulated model and a model that is non-hierarchical, Above, we already considered the model for the expected anual salary of the university professors in the USA and the proportion of the professors, associate professors and assistant professors were lowered by 40 (in order to ensure a better interpretation of the intercept parameter and better interpretation of the interaction terms).

Consider firstly a hierarchically well formulated model with and without the corresponding linear transformation of the number of professors.

summary(m3orig <- lm(salary.assoc ~ type + n.assoc * n.prof, data = Data)) ##

## Call:

## lm(formula = salary.assoc ~ type + n.assoc * n.prof, data = Data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -137.78 -40.50 -6.26 33.62 283.15

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.92e+02 9.88e+00 39.65 < 2e-16 ***

## typeIIA 1.75e+00 7.25e+00 0.24 0.8092

## typeIIB -2.68e+01 8.98e+00 -2.99 0.0029 **

## n.assoc 3.34e-01 5.45e-02 6.13 1.2e-09 ***

## n.prof 2.91e-01 3.29e-02 8.85 < 2e-16 ***

## n.assoc:n.prof -8.24e-04 8.61e-05 -9.57 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 56 on 1119 degrees of freedom

## Multiple R-squared: 0.39, Adjusted R-squared: 0.387

## F-statistic: 143 on 5 and 1119 DF, p-value: <2e-16summary(m3 <- lm(salary.assoc ~ type + n.assoc40 * n.prof40, data = Data)) ##

## Call:

## lm(formula = salary.assoc ~ type + n.assoc40 * n.prof40, data = Data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -137.78 -40.50 -6.26 33.62 283.15

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 4.16e+02 8.08e+00 51.43 < 2e-16 ***

## typeIIA 1.75e+00 7.25e+00 0.24 0.8092

## typeIIB -2.68e+01 8.98e+00 -2.99 0.0029 **

## n.assoc40 3.01e-01 5.26e-02 5.73 1.3e-08 ***

## n.prof40 2.58e-01 3.09e-02 8.35 < 2e-16 ***

## n.assoc40:n.prof40 -8.24e-04 8.61e-05 -9.57 < 2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 56 on 1119 degrees of freedom

## Multiple R-squared: 0.39, Adjusted R-squared: 0.387

## F-statistic: 143 on 5 and 1119 DF, p-value: <2e-16Individual work

Both models above a hierarchically well formulated models.

- Compare the estimated parameters and try to reconstruct the parameter estimates from one model using the estimates from the second model.

- Compare summary statistics for both models (i.e., degrees of freedom, residual sum of squares, \(F\)-statistic, etc. )

Now, we will fit two very similar models but both will be non-hierarchical. Compare both models and try to understand the effect of the transformation used.

summary(m3orig <- lm(salary.assoc ~ - 1 + type + n.assoc * n.prof - n.prof, data = Data)) ##

## Call:

## lm(formula = salary.assoc ~ -1 + type + n.assoc * n.prof - n.prof,

## data = Data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -141.17 -40.81 -7.94 33.81 288.50

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## typeI 4.31e+02 9.12e+00 47.27 < 2e-16 ***

## typeIIA 4.12e+02 4.87e+00 84.62 < 2e-16 ***

## typeIIB 3.71e+02 2.76e+00 134.22 < 2e-16 ***

## n.assoc 3.93e-01 5.59e-02 7.04 3.4e-12 ***

## n.assoc:n.prof -3.56e-04 7.03e-05 -5.07 4.7e-07 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 57.9 on 1120 degrees of freedom

## Multiple R-squared: 0.981, Adjusted R-squared: 0.981

## F-statistic: 1.18e+04 on 5 and 1120 DF, p-value: <2e-16summary(m3 <- lm(salary.assoc ~ - 1 + type + n.assoc40 * n.prof40 - n.prof40, data = Data)) ##

## Call:

## lm(formula = salary.assoc ~ -1 + type + n.assoc40 * n.prof40 -

## n.prof40, data = Data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -140.52 -40.75 -7.79 34.72 289.97

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## typeI 4.43e+02 7.60e+00 58.28 < 2e-16 ***

## typeIIA 4.26e+02 3.50e+00 121.80 < 2e-16 ***

## typeIIB 3.87e+02 2.51e+00 153.89 < 2e-16 ***

## n.assoc40 4.05e-01 5.26e-02 7.71 2.8e-14 ***

## n.assoc40:n.prof40 -4.34e-04 7.45e-05 -5.82 7.6e-09 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 57.7 on 1120 degrees of freedom

## Multiple R-squared: 0.981, Adjusted R-squared: 0.981

## F-statistic: 1.18e+04 on 5 and 1120 DF, p-value: <2e-166. Finding suitable hierarchically well-formulated model

We will apply stepwise backward selection process and start with model containing all interactions of second order

summary(mfull <- lm(salary.assoc ~ (type + n.prof40 + n.assoc40 + n.assist40)^2, data = Data)) ##

## Call:

## lm(formula = salary.assoc ~ (type + n.prof40 + n.assoc40 + n.assist40)^2,

## data = Data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -187.7 -35.8 -4.4 29.7 212.1

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 4.72e+02 1.19e+01 39.67 < 2e-16 ***

## typeIIA -4.44e+01 1.23e+01 -3.63 0.0003 ***

## typeIIB -6.57e+01 1.23e+01 -5.34 1.1e-07 ***

## n.prof40 4.09e-01 7.09e-02 5.77 1.0e-08 ***

## n.assoc40 1.30e-01 1.30e-01 1.00 0.3164

## n.assist40 -7.59e-01 1.67e-01 -4.53 6.4e-06 ***

## typeIIA:n.prof40 -2.82e-01 6.97e-02 -4.05 5.4e-05 ***

## typeIIB:n.prof40 3.60e-01 1.52e-01 2.37 0.0179 *

## typeIIA:n.assoc40 4.87e-01 1.58e-01 3.07 0.0022 **

## typeIIB:n.assoc40 7.60e-01 2.43e-01 3.13 0.0018 **

## typeIIA:n.assist40 2.60e-01 1.68e-01 1.55 0.1225

## typeIIB:n.assist40 1.05e+00 2.35e-01 4.47 8.5e-06 ***

## n.prof40:n.assoc40 -1.25e-03 2.86e-04 -4.35 1.5e-05 ***

## n.prof40:n.assist40 2.46e-04 3.23e-04 0.76 0.4458

## n.assoc40:n.assist40 1.65e-03 5.31e-04 3.11 0.0019 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 51.7 on 1110 degrees of freedom

## Multiple R-squared: 0.485, Adjusted R-squared: 0.478

## F-statistic: 74.7 on 14 and 1110 DF, p-value: <2e-16You can check which covariates to drop with:

drop1(mfull, test = "F")## Single term deletions

##

## Model:

## salary.assoc ~ (type + n.prof40 + n.assoc40 + n.assist40)^2

## Df Sum of Sq RSS AIC F value Pr(>F)

## <none> 2963012 8891

## type:n.prof40 2 86497 3049509 8919 16.20 1.2e-07 ***

## type:n.assoc40 2 37467 3000479 8901 7.02 0.00094 ***

## type:n.assist40 2 57827 3020839 8908 10.83 2.2e-05 ***

## n.prof40:n.assoc40 1 50555 3013567 8908 18.94 1.5e-05 ***

## n.prof40:n.assist40 1 1553 2964565 8889 0.58 0.44583

## n.assoc40:n.assist40 1 25785 2988798 8898 9.66 0.00193 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1We can update the model by eliminating insignificant terms:

m4 <- update(mfull, .~.-n.prof40:n.assist40)

drop1(m4, test = "F")## Single term deletions

##

## Model:

## salary.assoc ~ type + n.prof40 + n.assoc40 + n.assist40 + type:n.prof40 +

## type:n.assoc40 + type:n.assist40 + n.prof40:n.assoc40 + n.assoc40:n.assist40

## Df Sum of Sq RSS AIC F value Pr(>F)

## <none> 2964565 8889

## type:n.prof40 2 98228 3062793 8922 18.4 1.4e-08 ***

## type:n.assoc40 2 67205 3031770 8911 12.6 3.9e-06 ***

## type:n.assist40 2 57329 3021894 8907 10.7 2.4e-05 ***

## n.prof40:n.assoc40 1 55991 3020556 8908 21.0 5.2e-06 ***

## n.assoc40:n.assist40 1 34025 2998589 8900 12.8 0.00037 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Compare different models and submodels

anova(m1, m4, test = "F") # improvement## Analysis of Variance Table

##

## Model 1: salary.assoc ~ type + n.prof40 + n.assoc40 + n.assist40

## Model 2: salary.assoc ~ type + n.prof40 + n.assoc40 + n.assist40 + type:n.prof40 +

## type:n.assoc40 + type:n.assist40 + n.prof40:n.assoc40 + n.assoc40:n.assist40

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 1119 3792157

## 2 1111 2964565 8 827592 38.8 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1anova(m4, mfull, test = "F") # comparable## Analysis of Variance Table

##

## Model 1: salary.assoc ~ type + n.prof40 + n.assoc40 + n.assist40 + type:n.prof40 +

## type:n.assoc40 + type:n.assist40 + n.prof40:n.assoc40 + n.assoc40:n.assist40

## Model 2: salary.assoc ~ (type + n.prof40 + n.assoc40 + n.assist40)^2

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 1111 2964565

## 2 1110 2963012 1 1553 0.58 0.45add1(m4, scope = "n.prof40:n.assist40", test = "F") # the same - for forward selection## Single term additions

##

## Model:

## salary.assoc ~ type + n.prof40 + n.assoc40 + n.assist40 + type:n.prof40 +

## type:n.assoc40 + type:n.assist40 + n.prof40:n.assoc40 + n.assoc40:n.assist40

## Df Sum of Sq RSS AIC F value Pr(>F)

## <none> 2964565 8889

## n.prof40:n.assist40 1 1553 2963012 8891 0.58 0.45